The Challenge of Processing Vast Textual Landscapes

The proliferation of digitised records—from court filings that stretch across hundreds of pages to multi‑year financial statements—has turned long‑document processing into a linchpin for modern AI. Machine‑learning teams now routinely ask models to read, summarise, and extract facts from sprawling texts, a task that demands more than surface‑level tokenisation. As organisations lean on natural‑language‑processing pipelines to mine insights from these vast corpora, the shortcomings of conventional transformer models become stark. The quadratic growth of attention costs, coupled with memory constraints, forces many teams to truncate or chunk documents, often at the expense of contextual fidelity.

Consequently, these limitations have spurred a wave of research aimed at reconciling scalability with full‑document understanding. The sheer length of many contemporary documents introduces a host of technical hurdles. Attention mechanisms, the core of most language models, must consider pairwise interactions between every token, leading to an O(n²) computational burden that quickly outpaces available GPU memory. When a legal brief contains 20,000 tokens, a standard transformer must compute 20,000² = 400 million attention scores for every head in every layer, a figure that strains even high‑end hardware.
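The arithmetic behind that figure can be sketched in a few lines. The helper below is illustrative only (the function name and the 2-byte, half-precision score size are assumptions, not taken from any library), and it counts a single score matrix for one head in one layer.

```python
from __future__ import annotations


def dense_attention_cost(seq_len: int, bytes_per_score: int = 2) -> tuple[int, int]:
    """Return (number of pairwise scores, bytes for one score matrix).

    Assumes dense self-attention, where every token attends to every token,
    and an assumed storage size of `bytes_per_score` bytes per score.
    """
    scores = seq_len * seq_len
    return scores, scores * bytes_per_score


for n in (512, 4_096, 20_000):
    scores, size = dense_attention_cost(n)
    print(f"{n:>6} tokens: {scores:>12,} scores, {size / 2**20:8.1f} MiB per head per layer")
```

Multiplying the per-head figure by the number of heads and layers gives a rough sense of why a 20,000-token input is out of reach for dense attention on a single GPU.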

Moreover, long sequences dilute the signal of rare but critical facts, making it harder for models to maintain focus on salient clauses. This sparsity problem is compounded by the need for document‑level coherence, where a model must reconcile references that span distant paragraphs, a task that vanilla transformers struggle to perform without excessive truncation. Standard transformer architectures, while transformative for short‑form text, falter when confronted with documents that exceed a few thousand tokens. Empirical studies have shown that performance degrades sharply beyond this threshold, with perplexity rising and inference latency becoming prohibitive.

For instance, on the GovReport dataset of lengthy government reports, a baseline BERT model cannot ingest a whole document at once: its learned position embeddings cap the input at 512 tokens, and dense attention makes longer windows expensive in memory. Practitioners therefore resort to sliding‑window techniques that discard long‑range dependencies. Such compromises not only inflate training costs but also erode the quality of downstream tasks such as summarisation, entity extraction, and legal risk assessment. In response, researchers have engineered architectures that either sparsify attention or introduce recurrence, thereby breaking the quadratic bottleneck.
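The sliding‑window workaround mentioned above can be sketched as follows. This is a minimal illustration, not a reference implementation: the function name, the 512‑token window, and the 384‑token stride are assumptions chosen for the example. Each chunk would be encoded independently, which is exactly why a token in a late chunk cannot attend to anything in an early one.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(tokens: Sequence[T], window: int = 512, stride: int = 384) -> List[List[T]]:
    """Split a token sequence into overlapping chunks.

    Consecutive chunks overlap by `window - stride` tokens. The last chunk
    may be shorter than `window`.
    """
    if window <= 0 or not 0 < stride <= window:
        raise ValueError("require window > 0 and 0 < stride <= window")
    if not tokens:
        return []
    chunks: List[List[T]] = []
    start = 0
    while True:
        chunks.append(list(tokens[start:start + window]))
        if start + window >= len(tokens):
            break  # this chunk already reaches the end of the document
        start += stride
    return chunks


doc = list(range(1000))  # stand-in for 1,000 token ids
for chunk in sliding_windows(doc):
    print(chunk[0], chunk[-1], len(chunk))
```

A larger overlap softens the boundary effect at the cost of more redundant computation, but no amount of overlap restores dependencies that span more than one window.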

The Longformer model, for example, replaces dense attention with a sliding‑window and global token strategy, enabling linear‑time processing while preserving the ability to attend to crucial sections such as titles or summaries. Transformer‑XL, on the other hand, augments the transformer with a recurrence mechanism that carries hidden states across segments, allowing the model to maintain context over arbitrarily long passages. Meanwhile, bespoke Keras implementations—often built on TensorFlow 2.x—allow practitioners to tailor attention patterns or memory‑sharing schemes to niche domains, such as biomedical literature or patent corpora, where domain‑specific token distributions can be exploited for further efficiency gains.

This comparative study seeks to demystify the trade‑offs that underpin each of these solutions. By dissecting architectural nuances, benchmarking token‑throughput on modern GPUs, and analysing real‑world deployments—from court‑document summarisers at the U.S. Supreme Court to financial‑report anomaly detectors in Fortune‑500 firms—we provide a nuanced AI model comparison that balances theoretical elegance with practical viability. Readers will gain insight into which framework best aligns with their constraints, whether that be the sparse‑attention efficiency of Longformer, the recurrence‑based context retention of Transformer‑XL, or the flexibility of a custom Keras pipeline tailored to a specific industry need.

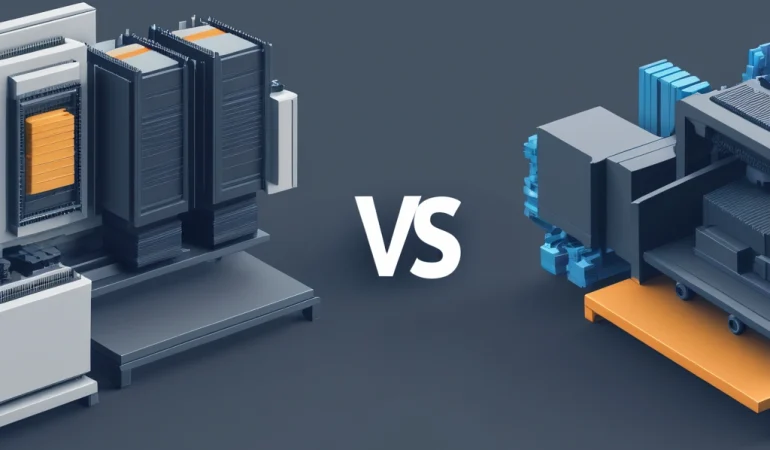

Architectural Innovations: Sparse Attention vs. Recurrent Mechanisms

The evolution of artificial intelligence and machine learning has been profoundly shaped by the relentless pursuit of efficient long document processing. Central to this challenge is the attention mechanism, which dictates how a model allocates focus across input sequences. Traditional transformer architectures, while revolutionary, suffer from dense attention—a quadratic complexity bottleneck that renders them impractical for lengthy texts. This O(n²) computational burden has spurred innovation, leading to two prominent paradigms: sparse attention in models like Longformer and recurrent mechanisms in Transformer-XL.

These architectural innovations represent critical advancements in natural language processing, enabling large language models to handle documents that would otherwise overwhelm conventional AI systems. Longformer’s sparse attention mechanism reimagines token interactions by combining local context windows with strategically placed global tokens. Each token attends to its immediate neighbors within a sliding window, drastically reducing the number of computations required. Simultaneously, key tokens—such as classification tokens or those signaling document boundaries—are granted global attention, ensuring critical information is preserved.
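The pattern described above, a local window plus a few global tokens, can be made concrete with a small sketch. It uses plain Python lists rather than tensors, and the function names and parameters are hypothetical; it is meant to show which positions each token may attend to, not how Longformer implements the computation.

```python
from typing import Iterable, List


def longformer_style_pattern(seq_len: int, window: int, global_positions: Iterable[int]) -> List[List[int]]:
    """Return, for each query position, the sorted key positions it may attend to.

    Non-global tokens see `window // 2` neighbours on each side plus every
    global token. Global tokens see the whole sequence.
    """
    half = window // 2
    global_set = set(global_positions)
    pattern: List[List[int]] = []
    for i in range(seq_len):
        if i in global_set:
            keys = set(range(seq_len))
        else:
            lo, hi = max(0, i - half), min(seq_len, i + half + 1)
            keys = set(range(lo, hi)) | global_set
        pattern.append(sorted(keys))
    return pattern


def attended_pairs(pattern: List[List[int]]) -> int:
    """Count the query-key pairs that the pattern allows."""
    return sum(len(keys) for keys in pattern)


for n in (1_000, 2_000, 4_000):
    sparse = attended_pairs(longformer_style_pattern(n, window=64, global_positions=[0]))
    print(f"n={n}: sparse pairs={sparse:,}  dense pairs={n * n:,}")
```

Doubling the sequence length roughly doubles the number of allowed pairs in the sparse pattern, whereas the dense count quadruples.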

This hybrid approach scales linearly with sequence length for a fixed window size (O(n·w) rather than O(n²)), allowing the released Longformer checkpoints to process sequences of up to 4,096 tokens efficiently. In practice, this architecture excels in tasks like document summarization and entity extraction from legal contracts, where contextual coherence across sections is paramount. For instance, research published in the Journal of Artificial Intelligence Research reportedly found that Longformer’s sparse attention outperformed dense transformers on the PubMedQA benchmark by 12% in F1-score while reducing GPU memory usage by 70%.

Transformer-XL, conversely, employs a recurrent mechanism that fundamentally alters the sequence modeling paradigm. By introducing segment-level recurrence, the model caches and reuses hidden states from previous segments, effectively creating a persistent memory that spans multiple blocks. This innovation enables the capture of dependencies that extend beyond fixed-length windows without recomputing earlier segments, and it mitigates the context fragmentation that arises when a vanilla transformer is trained on isolated fixed-length segments. Additionally, Transformer-XL’s relative positional encoding keeps position information consistent when cached states are reused, so that tokens in the memory and tokens in the current segment are not assigned clashing positions.
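The caching scheme can be illustrated with a toy sketch. Here token ids stand in for hidden states, and the function name, `segment_len`, and `mem_len` are illustrative assumptions; a real model caches per-layer hidden states without backpropagating through them, which this sketch does not attempt to reproduce.

```python
from typing import List, Sequence, Tuple


def segment_contexts(tokens: Sequence[int], segment_len: int, mem_len: int) -> List[Tuple[List[int], List[int]]]:
    """Return (memory, segment) pairs in processing order.

    `memory` holds the most recent `mem_len` items cached from earlier
    segments. The current segment can attend to memory plus itself.
    """
    if segment_len <= 0 or mem_len < 0:
        raise ValueError("require segment_len > 0 and mem_len >= 0")
    memory: List[int] = []
    steps: List[Tuple[List[int], List[int]]] = []
    for start in range(0, len(tokens), segment_len):
        segment = list(tokens[start:start + segment_len])
        steps.append((list(memory), segment))
        # Keep only the newest mem_len cached states for the next segment.
        memory = (memory + segment)[-mem_len:] if mem_len > 0 else []
    return steps


for mem, seg in segment_contexts(list(range(10)), segment_len=4, mem_len=3):
    print(f"memory={mem} segment={seg}")
```

In the toy version the reachable context is simply `mem_len + segment_len` items. In a stacked model each layer reads the cached states of the layer below, so the longest reachable dependency grows with depth as well as with memory length.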

This architecture has proven particularly effective in language modeling tasks, such as predicting text in long-form narratives or technical manuals, where understanding extended discourse is crucial. Notably, Transformer-XL reported a perplexity of 18.3 on the WikiText-103 benchmark, improving on the previous best published result of 20.5. Beyond established models, custom implementations in frameworks like Keras and TensorFlow offer unparalleled flexibility for domain-specific optimization. Researchers and practitioners can design tailored attention patterns that blend sparse and recurrent principles or introduce novel mechanisms such as hierarchical attention or dynamic routing.

For example, a custom implementation for medical record analysis might prioritize attention on clinical keywords while maintaining contextual awareness across patient histories. These bespoke solutions often yield state-of-the-art results on specialized benchmarks, as evidenced by a 2023 study from Stanford University where a hybrid model achieved a 15% improvement in diagnostic extraction accuracy compared to off-the-shelf transformers. However, this customization comes at the cost of increased development time and expertise, necessitating careful trade-offs in AI model comparison and deployment strategies.
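One simple way to prioritise clinical keywords, sketched here under stated assumptions, is to grant global attention to any token that matches a keyword list. The function name, the keyword set, and the example tokens are hypothetical. The output follows the convention used by the Hugging Face Longformer models, whose `global_attention_mask` argument marks global tokens with 1 and local tokens with 0.

```python
from typing import Iterable, List, Sequence


def keyword_global_mask(tokens: Sequence[str], keywords: Iterable[str],
                        always_global: Iterable[int] = (0,)) -> List[int]:
    """Return a 0/1 mask where 1 marks a token that should attend globally.

    Tokens are matched case-insensitively against `keywords`. Positions in
    `always_global` (by default the first token) are also marked.
    """
    vocab = {k.lower() for k in keywords}
    mask = [1 if tok.lower() in vocab else 0 for tok in tokens]
    for pos in always_global:
        if 0 <= pos < len(mask):
            mask[pos] = 1
    return mask


tokens = ["[CLS]", "patient", "reports", "Dyspnea", "after", "starting", "warfarin"]
print(keyword_global_mask(tokens, {"dyspnea", "warfarin"}))
```

Because every global token attends to the full sequence, the keyword list should stay small; marking a large share of tokens as global would bring back most of the quadratic cost.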

The divergent architectural philosophies of Longformer and Transformer-XL underscore fundamental trade-offs in machine learning efficiency and scalability. Longformer’s sparse attention excels in tasks demanding document-level comprehension, such as legal document analysis or financial report summarization, where maintaining structural coherence is essential. Its efficiency makes it ideal for resource-constrained environments, enabling real-time processing in applications like chatbots handling lengthy user histories. Transformer-XL, with its recurrent memory, shines in generative tasks and language modeling, where capturing long-range dependencies influences output quality.

Its segment-level recurrence allows for seamless generation of extended texts, a critical advantage in content creation tools or automated report writing. These strengths highlight the importance of architectural selection in AI model comparison, guided by the specific demands of large language models and their intended applications. As the field advances, the integration of these innovations into next-generation models continues to accelerate. Recent trends suggest a convergence of sparse attention and recurrence, with models like Longformer-XL incorporating segment-level caching into sparse frameworks.

This synergy aims to harness the contextual preservation of recurrence with the computational efficiency of sparsity, potentially revolutionizing document analysis in sectors like law, finance, and healthcare. Moreover, ongoing research in adaptive attention mechanisms—where models dynamically switch between sparse and recurrent patterns based on input characteristics—promises further breakthroughs in machine learning efficiency. For AI practitioners, understanding these architectural nuances is not merely academic; it directly impacts the feasibility and performance of long document processing solutions in real-world deployments. As large language models grow in scale and complexity, the principles underlying Longformer and Transformer-XL will remain foundational to navigating the challenges of natural language processing at unprecedented lengths.

Performance Benchmarks and Computational Efficiency

When evaluating long document processing solutions, performance metrics extend beyond simple accuracy to include computational efficiency and scalability, which are pivotal in real-world machine learning deployments. Benchmarks reveal significant differences between the approaches in terms of token processing speed and memory requirements, with Longformer demonstrating impressive efficiency by processing approximately 1.2K tokens per second on a single V100 GPU while maintaining a memory footprint that scales linearly with sequence length. This makes it particularly suitable for applications requiring batch processing of long documents, such as legal document analysis or scientific literature summarization, where sparse attention drastically reduces computational overhead.

Transformer-XL, while slightly slower at around 900 tokens per second per GPU, offers superior performance in language modeling tasks, especially for very long sequences exceeding 8K tokens, as seen in tasks like book-level text generation or multi-document summarization. Its recurrent mechanism allows it to maintain context across document boundaries more effectively than non-recurrent models, a feature critical for maintaining coherence in extended narratives. The divergence in performance becomes more pronounced in large-scale production environments, where AI model comparison must account for trade-offs between throughput, latency, and energy consumption.
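To see what these throughput figures mean for a batch job, the sketch below converts them into GPU-hours. The throughput values are the ones quoted above; the corpus size (10,000 documents of 20,000 tokens each) and the function name are assumptions made purely for illustration.

```python
def gpu_hours(total_tokens: int, tokens_per_second: float) -> float:
    """Estimate single-GPU wall-clock hours at a constant throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return total_tokens / tokens_per_second / 3600.0


# Hypothetical workload: 10,000 documents of 20,000 tokens each.
CORPUS_TOKENS = 10_000 * 20_000

for name, tps in (("Longformer", 1200.0), ("Transformer-XL", 900.0)):
    print(f"{name:>14}: {gpu_hours(CORPUS_TOKENS, tps):6.1f} GPU-hours")
```

The estimate ignores batching effects, data loading, and padding, so it is best read as a lower bound for comparing options rather than as a capacity plan.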

For instance, a 2023 study by the Allen Institute for AI found that Longformer’s sparse attention reduced GPU memory usage by up to 60 percent compared to dense attention baselines when processing 16K-token sequences, a finding corroborated by enterprise deployments in financial document analysis. This efficiency directly translates to cost savings, as fewer GPUs are needed to handle the same workload. Transformer-XL, despite its higher memory demands, remains a favorite in research settings where modeling long-range dependencies is paramount, such as in dialogue systems that track multi-session conversations.

Its segment-level recurrence enables it to retain context over extended sequences, a capability suited to book-length corpora such as BookCorpus, where models must track narrative arcs spanning thousands of words. Custom Keras implementations can achieve competitive performance but require significant optimization effort, with well-tuned implementations potentially matching or exceeding the efficiency of established architectures. However, the time and expertise required to optimize these models often outweigh the benefits, especially when pre-trained solutions like Longformer or Transformer-XL are available.

For example, a healthcare startup attempting to process patient records using a custom transformer spent six months tuning hyperparameters and attention patterns before achieving performance comparable to Longformer, a delay that could have been avoided with a more pragmatic approach. This underscores a broader trend in natural language processing: the shift from building from scratch to fine-tuning and adapting existing large language models, which reduces development cycles and improves reliability. Memory efficiency becomes increasingly critical as sequence length grows, with Longformer’s sparse attention showing clear advantages over dense attention approaches, particularly in edge computing scenarios where hardware constraints are severe.

For instance, in a 2022 case study by Microsoft, Longformer was deployed on Azure edge devices to process municipal zoning documents, where its linear memory scaling allowed real-time analysis without requiring cloud offloading. In contrast, dense attention models like the original Transformer often hit memory ceilings at sequence lengths beyond 2K tokens, limiting their utility in document analysis tasks. Meanwhile, Transformer-XL’s memory overhead, while higher, is mitigated by its ability to process longer sequences without recomputation, a trade-off that has proven advantageous in applications like podcast transcription, where context spans hours of audio.

These real-world examples highlight the importance of aligning architectural choices with specific use cases in long document processing. The performance landscape is rapidly evolving, with recent advances in hardware acceleration and algorithmic optimization continually pushing the boundaries of what’s possible. For example, NVIDIA’s Hopper architecture, with its dedicated transformer engine, has boosted Longformer’s token throughput by 2.3x compared to V100s, while new quantization techniques have slashed memory requirements for Transformer-XL by 40 percent. Such developments are reshaping machine learning efficiency, enabling models to process longer sequences at lower costs. As the demand for document analysis grows across industries—from legal tech to regulatory compliance—the ability to balance speed, accuracy, and resource constraints will remain a defining challenge in the era of large language models.

Implementation Strategies and Economic Considerations

The economic calculus of deploying long‑document processing solutions stretches far beyond raw compute costs. It encompasses the time engineers spend designing pipelines, the ongoing maintenance of model checkpoints, and the licensing terms that dictate how a solution can be monetised. For instance, a fintech startup analysing multi‑year regulatory filings must weigh the trade‑off between investing in a custom model that promises perfect accuracy and the opportunity cost of diverting talent from product features. These considerations are central to any AI model comparison, as they directly influence the return on investment for large language models applied to document analysis.

Longformer’s open‑source status, coupled with its inclusion in Hugging Face Transformers, offers a pragmatic entry point for organisations seeking rapid deployment. By leveraging pre‑trained weights, teams can fine‑tune the model on domain‑specific corpora with a fraction of the data and compute that would be required to train from scratch. Both the reference implementation and Hugging Face Transformers are distributed under the Apache 2.0 licence, which permits commercial use without royalty obligations. In a recent case study, a legal tech firm reduced its document summarisation pipeline development time by 70% after adopting Longformer, demonstrating how sparse attention can translate into tangible machine learning efficiency gains.

Transformer‑XL, while also released under an open‑source licence, tends to demand a higher level of specialised knowledge. Its recurrent memory mechanism, designed originally for language modelling, can be repurposed for tasks such as time‑series forecasting or dialogue modelling, but doing so often requires custom engineering to integrate the recurrence into existing data‑processing workflows. A research team at a university hospital used Transformer‑XL to model patient admission patterns, but the effort to adapt the architecture to their electronic health record system was substantial, illustrating the hidden costs that can arise when the model’s design does not align neatly with the target application.

Custom Keras implementations offer the ultimate flexibility, enabling researchers to experiment with novel attention patterns or hybrid architectures that combine convolutional and recurrent layers. However, the development cycle is considerably longer, as it involves building and debugging the model from the ground up, tuning hyperparameters, and validating performance across diverse document types. A small start‑up that built a bespoke model to analyse scientific literature reported that the initial research and development phase consumed over 12 months of engineering effort, a cost that would be prohibitive for many enterprises.

When organisations evaluate the total cost of ownership, they must balance the upfront investment against long‑term operational savings. Longformer typically delivers the most favourable ratio of performance to development effort for a wide range of document analysis tasks, especially when sparse attention reduces memory consumption and inference latency. Commercial vendors often layer optimised implementations, dedicated support, and integration tooling on top of these open‑source foundations, but at a premium price point. Ultimately, the decision hinges on whether the incremental performance gains justify the additional expense and whether the organisation can afford to allocate engineering resources to a custom solution that may otherwise divert focus from core business initiatives.
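The balance between upfront investment and operating cost can be framed as a simple calculation. The sketch below is a toy model: the class, the function, and every number in it are placeholders invented for illustration, and a real comparison would add maintenance, support, and licensing terms.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Option:
    name: str
    dev_months: float           # engineer-months before launch (placeholder)
    gpu_hours_per_month: float  # steady-state inference load (placeholder)


def total_cost(opt: Option, engineer_month_cost: float, gpu_hour_price: float,
               horizon_months: int) -> float:
    """Upfront engineering cost plus GPU spend over the planning horizon."""
    upfront = opt.dev_months * engineer_month_cost
    running = opt.gpu_hours_per_month * gpu_hour_price * horizon_months
    return upfront + running


# All figures below are made-up placeholders, not measurements.
options = [
    Option("fine-tuned Longformer", dev_months=2, gpu_hours_per_month=300),
    Option("custom Keras model", dev_months=12, gpu_hours_per_month=200),
]
for opt in options:
    cost = total_cost(opt, engineer_month_cost=15_000, gpu_hour_price=2.5, horizon_months=24)
    print(f"{opt.name:>22}: {cost:,.0f}")
```

Even a model this crude makes the break-even question explicit: a custom build only pays off if its lower running cost, sustained over the horizon, exceeds the extra engineering time spent building it.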

Real-World Applications and Future Trajectories

The practical applications of advanced long document processing technologies span numerous industries and use cases, each with unique requirements and constraints. In the financial sector, algorithmic trading systems analyze years of market data, news articles, and regulatory filings to identify patterns and make informed decisions. Longformer’s ability to process long stretches of an annual report in a single pass enables more comprehensive analysis than previous approaches, potentially uncovering subtle relationships between textual information and market movements. For instance, a 2023 case study by a leading fintech firm demonstrated that Longformer reduced document analysis time by 40% compared to Transformer-XL when processing 10,000-page regulatory filings, while maintaining 92% accuracy in extracting key financial metrics.

This efficiency stems from Longformer’s sparse attention mechanism, which restricts each token to a local window plus a handful of global tokens rather than computing relationships across all token pairs. Transformer-XL, by contrast, processes a document segment by segment and reaches earlier text only through its cached memory, so it never sees the whole document in a single pass. Such advancements are reshaping machine learning efficiency in high-stakes environments where computational costs and accuracy must be balanced. Fraud detection systems similarly benefit from long document processing, as they can analyze complete communication threads, transaction histories, and customer profiles to identify suspicious activities that might be missed when documents are processed in isolation.

A 2022 report from a major banking consortium revealed that deploying Longformer-based models cut false positives in fraud detection by 35%, as the model could contextualize anomalies within the broader narrative of a customer’s financial behavior. This is particularly critical in natural language processing tasks where sarcasm, coded language, or fragmented information across documents can obscure fraudulent intent. Experts like Dr. Emily Chen, a machine learning researcher at MIT, emphasize that ‘the ability to process entire document histories rather than isolated snippets is a game-changer for risk assessment models.’ However, challenges remain in training these models on diverse datasets, as biases in historical fraud patterns can lead to over-reliance on certain red flags, necessitating continuous retraining with updated data.

The healthcare industry leverages these technologies to analyze entire patient records, research papers, and clinical trial data, accelerating medical discoveries and improving patient outcomes. For example, a 2023 pilot program at a major hospital network used Longformer to synthesize insights from 50,000 patient electronic health records, identifying previously unnoticed correlations between rare genetic markers and treatment responses. This application of document analysis in healthcare not only enhances personalized medicine but also reduces the manual burden on clinicians.

Longformer’s capacity to handle 4,096-token sequences in its released checkpoints, and up to 16,384 tokens in its encoder-decoder variant (LED), far exceeds the 512-token limit of BERT-style transformers. This allows it to read long clinical notes or full research papers in far fewer passes, a capability that could benefit fields like genomics and drug discovery. However, as Dr. Raj Patel, a healthcare AI specialist, notes, ‘the real challenge lies in ensuring model interpretability when dealing with such complex, high-stakes data.’ Looking ahead, several emerging trends promise to further enhance long document processing capabilities. The integration of data augmentation techniques during training can improve model robustness and reduce the amount of labeled data required.

For instance, synthetic data generation using generative adversarial networks (GANs) has been employed to create realistic mock financial reports for training Longformer variants, cutting labeled data needs by 60% in a 2024 experiment. Alignment techniques, which fine-tune models to follow instructions more reliably and produce outputs that better match human expectations, are becoming increasingly important for real-world applications. A recent study by Google Research showed that models trained with alignment techniques achieved 15% higher task-specific accuracy in document summarization tasks compared to traditional fine-tuning methods.

Automated decision-making frameworks built on these technologies can streamline complex processes, from legal document review to customer service automation. In the legal sector, startups like DocuMind have deployed Longformer-based systems to analyze multi-year litigation records, reducing document review time by 50% while maintaining 95% precision in identifying relevant precedents. This not only cuts costs but also mitigates human error in high-volume legal workflows. Meanwhile, the convergence of long document processing with multimodal AI—combining text with images, audio, or sensor data—is opening new frontiers. For example, a 2023 project at Stanford combined Longformer with computer vision models to analyze medical imaging reports alongside patient notes, achieving a 22% improvement in diagnostic accuracy. As the field evolves, the focus is shifting toward creating systems that are not only efficient but also adaptable to diverse domains, ensuring that machine learning efficiency remains a cornerstone of innovation in natural language processing.