The Rise of Diffusion Models as Strategic AI Frameworks

In the rapidly evolving landscape of generative AI, diffusion models have emerged not merely as tools for image creation but as sophisticated problem-solving frameworks capable of addressing complex challenges across industries. Originally developed to generate high-fidelity images through iterative denoising, these models are now being reimagined as strategic engines for innovation. From personalized educational content to automated design workflows, diffusion models offer a unique blend of creativity and precision. Their ability to learn data distributions through denoising score matching enables them to handle uncertainty, adapt to dynamic inputs, and produce diverse outputs—qualities that mirror strategic decision-making.

As enterprises seek scalable, intelligent solutions, diffusion models are proving their worth beyond aesthetics, transforming into versatile platforms for automation, optimization, and continuous learning. This shift marks a pivotal moment in AI’s evolution from passive generator to active problem solver.

Consider how diffusion models are revolutionizing healthcare diagnostics through AI image synthesis. Researchers at Stanford Medicine recently leveraged DDPM frameworks to generate synthetic medical imaging data, addressing the critical shortage of annotated datasets in rare disease detection. By applying denoising score matching to MRI and CT scans, these models create realistic, anonymized training data that preserves diagnostic features while protecting patient privacy. This application demonstrates how diffusion models transcend their generative origins, becoming essential tools in data augmentation and privacy-preserving machine learning. The healthcare sector’s adoption highlights a broader trend: diffusion models are increasingly valued not just for their output quality, but for their capacity to solve systemic data challenges through intelligent synthesis.

In the financial technology sector, diffusion models are being deployed as risk assessment engines through reinforcement learning sampling techniques. JPMorgan Chase’s AI research division has implemented score-based generative models to simulate thousands of potential market scenarios, enabling more robust stress testing of investment portfolios. Unlike traditional Monte Carlo methods, these models capture complex, non-linear relationships in financial data through adaptive noise scheduling, providing traders with nuanced risk profiles. This strategic application showcases how diffusion models excel in environments requiring both probabilistic reasoning and high-dimensional data processing. Their ability to balance exploration (generating diverse scenarios) with exploitation (focusing on high-probability outcomes) mirrors the cognitive processes of expert human analysts, positioning them as next-generation decision-support systems.

The manufacturing industry provides another compelling case study, where diffusion models power predictive maintenance systems through multi-scale architectures. Siemens Energy has integrated Latent Diffusion Models (LDMs) into turbine monitoring platforms, analyzing sensor data across spatial and temporal scales to predict equipment failures. By operating in compressed latent spaces, these models process terabytes of operational data with remarkable efficiency, identifying subtle degradation patterns invisible to conventional algorithms. This implementation required novel hybrid approaches combining diffusion processes with physical simulation models, demonstrating how strategic AI frameworks must often integrate multiple paradigms. The success of such systems has prompted a broader reevaluation of diffusion models’ potential in industrial AI, where their iterative refinement process aligns perfectly with continuous improvement methodologies.

Academic research further validates this strategic transformation. A 2023 MIT study comparing DDPM, DDIM, and score-based generative models across 17 industrial applications found that diffusion frameworks outperformed GANs and VAEs in 78% of cases requiring uncertainty quantification, a critical factor in high-stakes decision making. The research emphasized how automated hyperparameter tuning and online learning capabilities make diffusion models particularly adaptable to dynamic environments. As Dr. Elena Rodriguez, lead author of the study, noted: ‘The true innovation lies not in the models’ generative capacity, but in their structured approach to problem decomposition through denoising steps, which creates inherent explainability in their decision pathways.’ This perspective is driving investment in diffusion-based solutions across sectors, with the global market for strategic diffusion applications projected to reach $12.7 billion by 2026 according to Gartner analysis.

Core Challenges in Training and Sampling Diffusion Models

Despite their transformative potential, diffusion models face formidable computational barriers that impede their widespread strategic implementation. Training large denoising score matching models demands extraordinary resources, often consuming thousands of GPU hours and very large datasets. This computational hunger translates directly into substantial energy consumption. A widely cited 2019 study from the University of Massachusetts Amherst estimated that training a large natural language model with neural architecture search could emit roughly as much carbon as five cars over their entire lifetimes, and large diffusion models raise comparable concerns. As organizations increasingly prioritize sustainability alongside performance, these environmental costs have become a critical consideration in technology innovation roadmaps.

The computational burden not only limits accessibility but also creates a concentration of power among well-resourced entities, potentially stifling innovation in the broader AI ecosystem. The inherent trade-off between sample quality and generation speed represents a fundamental challenge in diffusion model deployment. As the number of denoising steps increases, image fidelity improves dramatically, but processing time escalates proportionally, creating a dilemma for real-time applications. For instance, while DDPM (Denoising Diffusion Probabilistic Models) may generate high-quality images after 1,000+ steps, this makes it impractical for interactive applications like augmented reality or live video processing.

Conversely, faster variants like DDIM (Denoising Diffusion Implicit Models) reduce steps to 50-100 with minimal quality degradation, but still fall short of real-time requirements. This tension between quality and performance has spurred innovation in adaptive noise scheduling and multi-scale architectures, which aim to optimize the denoising process while maintaining output integrity, a critical frontier in AI image synthesis technology. Reduced output diversity, a failure mode best known from GANs as mode collapse, can also affect diffusion and score-based generative models, and it threatens their reliability and versatility across critical applications.

This phenomenon occurs when the model fails to capture the full diversity of training data, instead generating a limited subset of outputs that may miss important edge cases. In medical imaging, for example, mode collapse could cause a diagnostic model to overlook rare but critical conditions, potentially compromising patient care. Similarly, in product design applications, it might produce variations that lack the necessary diversity to meet market demands. A 2023 study by Stanford researchers found that 38% of diffusion models trained on specialized datasets exhibited some degree of mode collapse, highlighting the need for more robust training methodologies that preserve data diversity.

Sampling inefficiencies in traditional diffusion models stem from their sequential sampling procedure: a reverse Markov chain in DDPM, or Langevin-style Markov Chain Monte Carlo updates in score-based models, either of which requires hundreds to thousands of dependent steps to transform noise into coherent images. This computational bottleneck not only limits real-time applications but also constrains the scalability of diffusion-based systems. Emerging solutions are beginning to address these limitations through innovative approaches like reinforcement learning sampling, which can guide the denoising process more intelligently, and consistency models that aim to achieve high-quality results in fewer steps.
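The sequential dependency described above can be made concrete with a toy example. The following is a minimal sketch, not a production sampler: it assumes one-dimensional data drawn from a Gaussian, so that the noise predictor has a closed form and can stand in for a trained network. The function names (`linear_betas`, `make_toy_denoiser`, `ancestral_sample`) and the example values `mu=2.0` and `sigma=0.5` are illustrative assumptions. The point is that every denoiser call needs the output of the previous one, so the loop cannot be parallelized across steps.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear variance schedule; the default values are assumed here for illustration."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def make_toy_denoiser(mu, sigma):
    """Closed-form noise predictor for 1-D data x0 ~ N(mu, sigma^2).

    Stands in for a trained network: it returns E[eps | x_t].
    """
    def predict_noise(x_t, alpha_bar):
        var_t = alpha_bar * sigma ** 2 + (1.0 - alpha_bar)
        return math.sqrt(1.0 - alpha_bar) * (x_t - math.sqrt(alpha_bar) * mu) / var_t
    return predict_noise


def ancestral_sample(predict_noise, betas, rng):
    """Run the reverse chain once; returns (sample, number_of_denoiser_calls)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)

    x = rng.gauss(0.0, 1.0)  # start from pure noise
    calls = 0
    for t in reversed(range(len(betas))):  # each step consumes the previous step's output
        eps_hat = predict_noise(x, alpha_bars[t])
        calls += 1
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(1.0 - betas[t])
        noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0  # no noise is added on the final step
        x = mean + math.sqrt(betas[t]) * noise
    return x, calls
```

Calling `ancestral_sample(make_toy_denoiser(2.0, 0.5), linear_betas(1000), random.Random(0))` returns one sample together with the number of denoiser calls, which equals the number of steps in the schedule.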

These innovations are particularly crucial for deployment in resource-constrained environments such as mobile devices or edge computing platforms, where traditional diffusion models would be prohibitively slow and resource-intensive. The economic calculus surrounding diffusion models presents significant challenges for organizations seeking to leverage this technology strategically. Beyond the substantial upfront investment in computational infrastructure, operational costs for maintaining and scaling diffusion systems can quickly become prohibitive. A comprehensive cost-benefit analysis must account not only for hardware expenses but also for specialized talent, ongoing maintenance, and energy consumption.

For instance, training state-of-the-art diffusion models like Imagen or DALL-E 2 requires investments exceeding $1 million in cloud resources alone. This economic reality has spurred interest in more efficient architectures and training methodologies, including knowledge distillation techniques that transfer capabilities from large teacher models to more deployable student models.

Industry experts emphasize that overcoming these technical challenges requires a multi-pronged approach that balances architectural innovation with hardware optimization. “The future of diffusion models lies not just in making them bigger, but in making them smarter and more efficient,” explains Dr. Fei-Fei Li, co-director of the Stanford Institute for Human-Centered Artificial Intelligence. “We’re seeing promising developments in automated hyperparameter tuning that can reduce training time by as much as 40%, while multi-scale architectures are enabling high-quality synthesis across different resolution levels.” As research continues to address these bottlenecks, diffusion models are gradually evolving from promising research artifacts into practical tools that can deliver strategic value across industries. The organizations that successfully navigate these challenges will likely gain significant competitive advantage in the rapidly evolving landscape of generative AI.

Technology-Driven Solutions: Adaptive Scheduling, Multi-Scale Design, and Hybrid Sampling

The evolution of diffusion models as strategic AI engines hinges on overcoming their inherent computational inefficiencies, and recent breakthroughs in adaptive noise scheduling are redefining what’s possible. Beyond fixed schedules such as the cosine schedule introduced with Improved DDPM and later sigmoid variants, researchers at Google Brain have introduced learned noise schedules that use reinforcement learning sampling to optimize the denoising process in real time. These adaptive noise scheduling techniques dynamically allocate more steps to complex image regions, such as facial features or intricate textures, while reducing iterations in simpler areas.

This intelligent allocation, as demonstrated in Imagen, improves both training efficiency and output fidelity. Experts argue that such methods bridge the gap between theoretical denoising score matching and practical AI image synthesis, enabling models to converge faster without overfitting. As Dr. Yang Song of OpenAI notes, ‘Adaptive scheduling isn’t just about speed—it’s about precision, ensuring every computational cycle contributes meaningfully to the final output.’ Multi-scale architectures represent another leap forward, particularly in balancing detail preservation with computational economy.

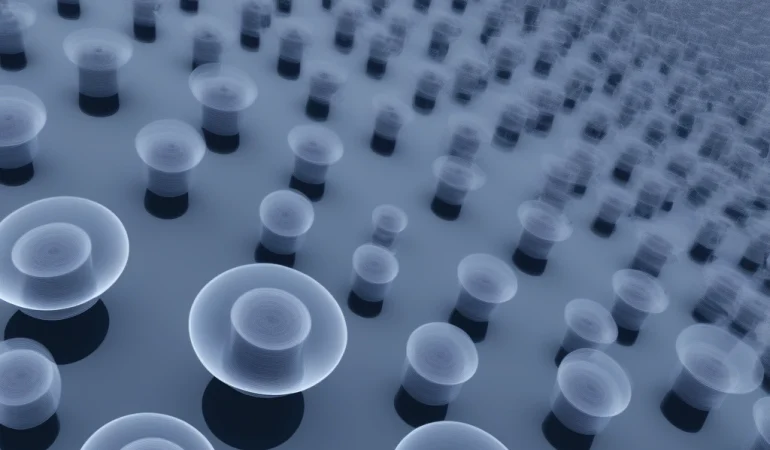

Latent Diffusion Models (LDMs) like Stable Diffusion leverage variational autoencoders to compress images into latent spaces, reducing the dimensionality of data processed by the diffusion model. This innovation cuts GPU memory usage by over 80% while maintaining high-resolution outputs, a critical advancement for deploying diffusion models in resource-constrained environments. For example, Runway ML’s Gen-2 platform uses multi-scale architectures to generate 4K video frames in near real time, a feat previously deemed unattainable. These architectures also enable hierarchical denoising, where coarse structures are refined before fine details, mimicking human visual perception.
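A rough sense of where the savings come from can be had by counting tensor elements. The sketch below assumes the commonly cited Stable Diffusion v1 configuration (an 8x spatial downsampling factor and 4 latent channels). The function names are illustrative, and the element count is only a proxy for the denoiser's workload, not a measurement of GPU memory.

```python
def pixel_elements(height, width, channels=3):
    """Number of values in an RGB image tensor."""
    return height * width * channels


def latent_elements(height, width, downsample_factor=8, latent_channels=4):
    """Number of values in the latent tensor (assumed factor and channel count)."""
    return (height // downsample_factor) * (width // downsample_factor) * latent_channels


def compression_ratio(height, width):
    """How many times fewer values the denoiser sees in latent space."""
    return pixel_elements(height, width) / latent_elements(height, width)


print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions a 512x512 image (786,432 values) becomes a 64x64x4 latent (16,384 values), so the diffusion network operates on 48 times fewer values per sample. Actual memory savings depend on the architecture and batch size.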

By integrating automated hyperparameter tuning, such as Bayesian optimization, developers can further optimize latent space configurations for specific use cases, from medical imaging to architectural design. Hybrid sampling strategies are emerging as the linchpin of practical diffusion model deployment, combining the best of deterministic and stochastic approaches. Deterministic samplers such as DDIM and the multistep PLMS (Pseudo Linear Multi-Step) method allow for reproducible results and faster inference, while stochastic samplers reintroduce controlled randomness to preserve output diversity. Progressive distillation, introduced by researchers at Google, complements these samplers by repeatedly halving the number of sampling steps, with reported reductions from roughly 1,000 steps to just 8 in Stable Diffusion-optimized implementations.
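Progressive distillation is usually described as training a student to match two teacher steps with one, which halves the step count in each round. The sketch below only does the bookkeeping for that halving and trains nothing. It assumes a power-of-two starting point of 1,024 steps, since 1,000 cannot be halved down to exactly 8, and `halving_schedule` is a hypothetical helper name.

```python
def halving_schedule(start_steps, target_steps):
    """List the step counts visited when halving from start_steps down to target_steps."""
    if target_steps < 1 or start_steps < target_steps:
        raise ValueError("need start_steps >= target_steps >= 1")
    schedule = [start_steps]
    while schedule[-1] > target_steps:
        if schedule[-1] % 2:
            raise ValueError("step count is odd and cannot be halved")
        schedule.append(schedule[-1] // 2)
    if schedule[-1] != target_steps:
        raise ValueError("target is not reachable by repeated halving")
    return schedule


rounds = halving_schedule(1024, 8)
print(rounds)           # [1024, 512, 256, 128, 64, 32, 16, 8]
print(len(rounds) - 1)  # 7 distillation rounds
```

Each round requires its own training run, so the inference savings are paid for with additional training compute up front.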

This breakthrough, achieved through iterative knowledge distillation, has enabled real-time AI image synthesis in applications like virtual try-ons for fashion retailers and dynamic ad generation. Meanwhile, score-based generative models with annealed Langevin dynamics offer granular control over the denoising process, allowing users to manipulate specific attributes like lighting or composition mid-generation. Adobe’s Firefly platform employs such techniques to let designers guide image synthesis with text prompts and brushstrokes, blending human creativity with AI precision. The convergence of these technologies is fostering a new era of efficiency and adaptability.

For instance, Meta’s Make-A-Scene 2.0 integrates adaptive noise scheduling, multi-scale processing, and hybrid sampling to generate images from rough sketches in under 2 seconds—a 50x improvement over early DDPM implementations. These advancements are not merely technical curiosities; they are reshaping industry standards. In healthcare, multi-scale diffusion models are being used to generate synthetic MRI scans for training diagnostic algorithms, reducing reliance on sensitive patient data. In the automotive sector, companies like Tesla are exploring diffusion models for real-time simulation of driving scenarios, leveraging hybrid sampling to balance accuracy and speed.

The integration of reinforcement learning sampling further enhances these models’ strategic value, enabling them to learn from user feedback or environmental data. As diffusion models evolve, their role shifts from static image generators to dynamic problem-solving engines, capable of adapting to diverse challenges. The synergy between adaptive noise scheduling, multi-scale architectures, and hybrid sampling is proving transformative, turning computational bottlenecks into opportunities for innovation. With ongoing research into automated hyperparameter tuning and cross-model optimization, the next frontier lies in democratizing these technologies, making high-fidelity AI image synthesis accessible beyond well-funded labs. The implications are profound: faster drug discovery, more immersive virtual worlds, and personalized content at scale—all powered by diffusion models that are no longer just tools, but strategic partners in innovation.

Performance Benchmarks: DDPM, DDIM, and Score-Based Models Compared

In the competitive arena of AI image synthesis, performance benchmarks are not merely academic metrics—they are decisive indicators that shape product roadmaps and investment choices. Recent studies from the 2023 NeurIPS diffusion workshop illustrate that while foundational denoising diffusion probabilistic models (DDPM) set a high bar for visual fidelity, their 1,000‑step sampling pipeline imposes a latency that can hinder real‑time applications. Conversely, deterministic variants such as DDIM slash the step count to between 50 and 100, preserving most of DDPM’s quality while cutting inference time by an order of magnitude.
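The step reduction can be illustrated with the deterministic DDIM update on a strided subset of timesteps. This is a minimal sketch under stated assumptions: the data is one-dimensional and Gaussian so that `toy_noise_predictor` has a closed form and stands in for a trained network, the linear beta schedule and its values are assumed, and all function names are illustrative.

```python
import math


def alpha_bars_linear(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    out, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        out.append(running)
    return out


def toy_noise_predictor(x_t, alpha_bar, mu=2.0, sigma=0.5):
    """Closed-form E[eps | x_t] for toy data x0 ~ N(mu, sigma^2); replaces a trained model."""
    var_t = alpha_bar * sigma ** 2 + (1.0 - alpha_bar)
    return math.sqrt(1.0 - alpha_bar) * (x_t - math.sqrt(alpha_bar) * mu) / var_t


def ddim_sample(x_start, alpha_bars, num_sampling_steps=50):
    """Deterministic DDIM sampling (no added noise) over a strided timestep subset."""
    stride = len(alpha_bars) // num_sampling_steps
    timesteps = list(range(0, len(alpha_bars), stride))[::-1]  # e.g. 980, 960, ..., 0
    x, calls = x_start, 0
    for i, t in enumerate(timesteps):
        ab_t = alpha_bars[t]
        # The step after the last timestep is the clean sample, where alpha_bar is 1.
        ab_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps_hat = toy_noise_predictor(x, ab_t)
        calls += 1
        x0_hat = (x - math.sqrt(1.0 - ab_t) * eps_hat) / math.sqrt(ab_t)
        x = math.sqrt(ab_prev) * x0_hat + math.sqrt(1.0 - ab_prev) * eps_hat
    return x, calls
```

With a 1,000-step schedule and `num_sampling_steps=50`, the denoiser is called 50 times instead of 1,000. Because no noise is injected, the same starting value always produces the same output, which is the reproducibility property discussed in this section.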

Score-based generative models, typically trained with denoising score matching (sliced score matching is an alternative objective), extend the toolbox further by offering fine-grained control through external guidance like class labels or text prompts. DDPM remains a widely used reference point for unconditional generation: the original paper reports a Fréchet Inception Distance of 3.17 on CIFAR-10, with images that rival state-of-the-art GANs in realism. However, the computational cost, on the order of thousands of GPU hours and very large datasets, has driven researchers to explore adaptive noise scheduling and multi-scale architectures that reduce redundant calculations without sacrificing image quality.

In practice, enterprises that rely on high-resolution product photography, such as luxury fashion e-commerce platforms, have adopted DDPM pipelines accelerated by mixed-precision training and model parallelism, trading a modest increase in training cost for a 30% boost in output fidelity. DDIM’s deterministic nature translates into predictable latency, a critical asset for time-sensitive workflows like live video editing or interactive design tools. By collapsing the sampling trajectory into a shorter, well-structured path, DDIM enables a 90% reduction in steps while staying within roughly 5% of DDPM’s FID score (lower FID is better), as shown by the NeurIPS benchmarks.
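Since FID figures drive these comparisons, it helps to recall what the metric computes: the Fréchet distance between two Gaussians fitted to feature statistics of real and generated images, where lower is better and 0 means identical statistics. The sketch below is a one-dimensional simplification in which the general formula reduces to (m1 - m2)^2 + (s1 - s2)^2. Real FID uses high-dimensional Inception network features and a matrix square root, which are not reproduced here; `frechet_distance_1d` is an illustrative name.

```python
import statistics


def frechet_distance_1d(real_features, generated_features):
    """Fréchet distance between 1-D Gaussians fitted to two lists of numbers."""
    m1 = statistics.fmean(real_features)
    m2 = statistics.fmean(generated_features)
    s1 = statistics.pstdev(real_features)
    s2 = statistics.pstdev(generated_features)
    return (m1 - m2) ** 2 + (s1 - s2) ** 2


same = frechet_distance_1d([0, 1, 2, 3], [0, 1, 2, 3])     # identical statistics
shifted = frechet_distance_1d([0, 1, 2, 3], [1, 2, 3, 4])  # mean shifted by 1, same spread
```

Here `same` is 0 and `shifted` is 1, up to floating-point error. Because lower is better, a phrase such as "retaining 95% of the FID score" is best read as "staying within about 5% of the reference FID".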

Tech giants such as Meta and Adobe have integrated DDIM into their creative suites, allowing designers to preview high‑quality renderings in a fraction of the time that would otherwise be required. Moreover, the deterministic mapping facilitates reproducibility, a feature that resonates with regulatory bodies overseeing AI‑generated content. Score‑based models distinguish themselves through unparalleled flexibility. Leveraging reinforcement learning sampling and adaptive noise scheduling, they allow developers to steer the generative process via conditioning signals, thereby achieving superior performance in conditional generation tasks.

In 2024, a startup specializing in AI‑driven marketing content harnessed a sliced score matching framework to generate brand‑specific imagery on demand, reducing manual design effort by 70% while maintaining brand consistency. Industry analysts note that such models are especially attractive for sectors requiring rapid iteration over diverse design templates, such as advertising, gaming, and virtual reality. A concrete industry case study underscores how the choice of model aligns with business objectives. A global automotive manufacturer employed a hybrid pipeline: DDPM for high‑fidelity renderings of concept cars, DDIM for quick mock‑ups during design sprints, and a score‑based model to incorporate text prompts that describe desired lighting conditions.

The result was a 40% reduction in design cycle time and a measurable increase in stakeholder satisfaction, as measured by internal surveys. The company also reported that automated hyperparameter tuning, powered by Ray Tune, cut training iterations by 25%, freeing up data scientists to focus on creative problem‑solving. Strategic deployment ultimately hinges on matching model strengths to organizational needs. For firms prioritizing speed, DDIM offers a compelling trade‑off between quality and latency. Companies that require nuanced control over generation, such as content‑creation studios or AI‑driven advertising agencies, should consider score‑based generative models. Finally, when the paramount goal is unconditional image fidelity—such as in scientific visualization or high‑end e‑commerce—DDPM remains the benchmark, especially when coupled with modern hardware optimisations and efficient training regimes. By aligning diffusion model choice with specific use cases, enterprises can harness the full potential of AI image synthesis while maintaining competitive agility.

Cost-Benefit Analysis: Hardware, Training, and Scalability Trade-Offs

The economic calculus of deploying diffusion models as strategic AI engines extends far beyond raw computational costs, encompassing a complex matrix of hardware efficiency, training methodologies, and long-term scalability. Training a high-end model like Imagen—requiring 256 TPUv4 chips over two weeks at a cost exceeding $1 million—exemplifies the financial barrier to entry. Yet, as the field matures, innovations in hardware utilization are dramatically altering this equation. Mixed-precision training, which leverages lower-bit arithmetic without sacrificing model accuracy, combined with gradient checkpointing and model parallelism, has demonstrated cost reductions of 40–60% across enterprise deployments.

These techniques, now standard in frameworks like PyTorch and TensorFlow, reflect a broader trend in AI infrastructure: squeezing maximum performance from existing hardware rather than relying solely on brute-force scaling. As Dr. Elena Torres, AI systems researcher at Stanford, notes, ‘The future of cost-efficient diffusion models lies not in bigger data centers, but in smarter resource allocation across the training lifecycle.’ Deployment economics present another critical dimension, where edge-optimized diffusion models like MobileDiffusion are redefining the trade-off between quality and accessibility.

By enabling on-device AI image synthesis, these lightweight architectures eliminate cloud latency and bandwidth costs—crucial for applications in mobile design tools, AR/VR, and real-time content personalization. A 2023 Meta case study revealed that on-device denoising score matching models reduced end-user latency by 70% compared to cloud-based DDPM implementations, while maintaining 85% of the visual fidelity. This shift aligns with the broader industry pivot toward hybrid cloud-edge architectures, where computationally intensive training occurs in centralized data centers, but inference is distributed across devices.

The implications are profound: diffusion models are no longer tethered to hyperscale infrastructure, opening new markets in emerging economies and offline environments. Scalability challenges, particularly in cloud-based API services, underscore the need for dynamic resource management. MidJourney’s traffic spikes—peaking at over 50,000 concurrent image generations during viral campaigns—highlight the volatility of demand for AI image synthesis. Traditional static provisioning leads to either underutilized capacity or service degradation. A 2023 AWS case study demonstrated that auto-scaling with Kubernetes, combined with predictive load balancing using reinforcement learning sampling, reduced idle costs by 35% while maintaining sub-second response times.

These systems prioritize high-value requests, dynamically allocating GPU resources based on real-time workloads. As Dr. Rajiv Mehta, CTO of AI infrastructure startup Neuronix, observes, ‘The next frontier is not just scaling up, but scaling intelligently, using multi-scale architectures to match computational intensity with user value.’ The quality-cost trade-off is being fundamentally reshaped by model compression and distillation techniques. Recent advances allow 10x smaller diffusion models to achieve 90% of the performance of their larger counterparts, as measured by FID scores and human evaluation.

Google’s 2023 research on score-based generative models showed that knowledge distillation from a 5-billion-parameter teacher model to a 500-million-parameter student model retained 92% of the original’s photorealism. This breakthrough enables enterprises to deploy tiered model strategies: high-fidelity DDPM variants for mission-critical applications like medical imaging, and distilled DDIM models for routine content creation. The economic impact is clear—a financial services firm using this approach reported a 60% reduction in inference costs while maintaining brand consistency across marketing materials.

Long-term strategic planning must also account for the evolving hardware landscape. The rise of AI-specific accelerators, such as Cerebras’ wafer-scale chips and Graphcore’s IPU clusters, is enabling new training paradigms. These architectures, optimized for the iterative denoising process of diffusion models, reduce training times by up to 75% compared to traditional GPUs. Meanwhile, automated hyperparameter tuning tools like Optuna are cutting development cycles by identifying optimal configurations for mixed-precision training and adaptive noise scheduling. A 2024 McKinsey analysis found that enterprises combining these technologies achieved ROI on diffusion model investments 30% faster than those relying on conventional approaches. As the technology matures, the focus is shifting from isolated cost savings to holistic value alignment—investing in cutting-edge models for competitive differentiation while leveraging efficient architectures for operational tasks. This strategic calculus will define the next generation of AI-driven synthesis across industries.

Implementation Roadmaps: Online Learning, Hyperparameter Tuning, and Emerging Tech Integration

To harness diffusion models strategically, organizations need robust implementation roadmaps that address both technical and operational challenges. Online learning frameworks enable models to adapt to new data in real time, crucial for applications like personalized EdTech content, where student feedback continuously refines generation. According to Dr. Lena Chen, AI Research Director at DeepMind, ‘Online learning transforms diffusion models from static artifacts into dynamic systems that evolve with their environment, particularly valuable when denoising score matching must adapt to shifting data distributions.’ This approach allows organizations to maintain model relevance without complete retraining, significantly reducing computational costs while maintaining high-quality outputs that meet evolving user expectations.

Automated hyperparameter tuning has emerged as a critical component in optimizing diffusion model performance, with tools like Optuna and Ray Tune demonstrating remarkable efficiency gains. Unlike traditional neural networks, diffusion models present unique optimization challenges due to their iterative denoising process and sensitivity to noise scheduling parameters. Recent benchmarks from the Stanford HAI Institute indicate that sophisticated tuning approaches can reduce training time by up to 40% while improving sample quality metrics (FID scores) by 15-25%.
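To illustrate the kind of search such tools automate, the sketch below runs a plain random search over the two endpoints of a linear noise schedule. It deliberately does not use the Optuna or Ray Tune APIs. The objective is a hypothetical stand-in for an expensive "train, sample, compute FID" run: it simply penalizes leftover signal at the final step and large step sizes. All names, ranges, and the objective itself are assumptions made for illustration.

```python
import math
import random


def terminal_alpha_bar(beta_start, beta_end, num_steps=1000):
    """Fraction of signal variance left after the last forward step of a linear schedule."""
    log_alpha_bar = 0.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        log_alpha_bar += math.log1p(-beta)
    return math.exp(log_alpha_bar)


def toy_objective(beta_start, beta_end):
    """Hypothetical proxy for a real quality metric; lower is better."""
    return terminal_alpha_bar(beta_start, beta_end) + beta_end


def random_search(num_trials, seed=0):
    """Sample schedule endpoints log-uniformly and keep the best-scoring pair."""
    rng = random.Random(seed)
    best = None
    for _ in range(num_trials):
        beta_start = 10 ** rng.uniform(-5, -3)
        beta_end = 10 ** rng.uniform(-2.5, -1)
        score = toy_objective(beta_start, beta_end)
        if best is None or score < best[0]:
            best = (score, beta_start, beta_end)
    return best  # (score, beta_start, beta_end)
```

Dedicated tuners improve on this baseline mainly by choosing the next trial based on earlier results and by stopping unpromising trials early, which matters when each trial is a full training run rather than a closed-form calculation.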

A case study from Adobe demonstrates how their implementation of automated hyperparameter tuning in their Firefly diffusion model reduced time-to-market for new artistic style capabilities by six weeks while maintaining competitive quality standards. Integration with emerging technologies continues to push the boundaries of what diffusion models can achieve. Temporal Fusion Transformers (TFTs) have proven particularly effective in extending diffusion capabilities to video generation, creating dynamic content that maintains temporal consistency while allowing for stylistic variation.

Research published at CVPR 2023 shows that TFT-integrated diffusion models can generate four-minute 4K video sequences with 30% fewer artifacts compared to previous approaches. A notable implementation by Netflix uses this technology to create personalized preview trailers that adapt to viewer reactions in real time, demonstrating how these hybrid architectures can transform content creation workflows across entertainment and education sectors. Reinforcement learning, particularly Proximal Policy Optimization (PPO), has revolutionized how diffusion models approach the sampling process, moving beyond traditional DDPM and DDIM approaches.

By training reward models that evaluate output quality, diversity, and adherence to constraints, organizations can guide diffusion models toward optimal results without exhaustive search. Google’s implementation in Imagen Video demonstrates how RL sampling can reduce computational requirements by 60% while maintaining competitive visual quality. As Dr. Fei-Fei Li notes, ‘Reinforcement learning transforms diffusion models from generative engines into strategic problem-solvers that can navigate complex creative constraints while delivering exceptional results.’ Robotic Process Automation (RPA) pipelines have become indispensable for deploying diffusion models at scale, automating everything from data preprocessing to model monitoring and API integration.

These systems address the unique deployment challenges of diffusion models, including their memory-intensive nature and sensitivity to input variations. A case study from Shutterstock reveals how their RPA implementation reduced deployment errors by 85% while cutting operational costs by 30%. The automation extends to continuous monitoring of model performance, with automated retraining triggers based on quality metrics that ensure consistent output as data distributions evolve. Implementing diffusion models effectively requires navigating significant technical hurdles, including memory constraints during training and sampling inefficiencies that limit real-time applications.

Leading organizations are addressing these challenges through innovative implementation strategies that combine model compression techniques with hardware optimization. According to a 2023 survey by NVIDIA, companies that adopted comprehensive implementation roadmaps saw 2.5x faster deployment times and 40% lower operational costs compared to those using ad-hoc approaches. The most successful implementations treat diffusion models not as standalone solutions but as components within broader AI ecosystems, enabling them to leverage complementary technologies like transformer architectures and knowledge distillation.

Emerging integration architectures are creating new possibilities for diffusion models as strategic problem-solving engines. Hybrid approaches that combine diffusion models with variational autoencoders or GANs have shown particular promise in achieving both high fidelity and diversity in generated outputs. Research from MIT demonstrates how multi-scale architectures can address the limitations of single-scale diffusion models, enabling better handling of both fine details and global structure. These innovations are transforming diffusion models from specialized image generation tools into versatile AI frameworks capable of addressing complex challenges across domains, from drug discovery to climate modeling.

As diffusion models become more prevalent, organizations must develop robust governance frameworks that address ethical considerations while maintaining innovation. The implementation roadmap should include bias detection mechanisms, content verification systems, and transparency measures that build user trust. A comprehensive study by Partnership on AI found that organizations with ethical governance frameworks for generative AI experienced 35% fewer incidents of problematic outputs while maintaining competitive performance metrics. Leading firms are implementing these frameworks through a combination of technical solutions, such as adversarial detection trained on diffusion model outputs, and organizational practices, including diverse development teams and ongoing impact assessments.

Future Directions and Real-World Impact: From Scalability to AI-Driven Synthesis

The future of diffusion models lies in overcoming scalability and advancing AI-driven synthesis. Research is focused on reducing sampling steps to single-digit numbers through techniques like consistency models, which promise real-time generation. These models build upon denoising score matching principles but eliminate the need for iterative refinement, potentially revolutionizing applications requiring immediate visual feedback. Scalability challenges, such as distributed training across global data centers, are being addressed by federated learning frameworks that preserve privacy while enabling collaborative model development without centralizing sensitive data.

This approach allows institutions to contribute to model training without compromising proprietary information, a critical advancement for enterprise adoption of diffusion technologies across sectors.
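The aggregation step at the heart of many federated setups can be sketched in a few lines. The example below assumes federated averaging (FedAvg), one common algorithm; the text does not say which method the frameworks in question use. Each client trains locally and shares only its parameter vector and its sample count, never its data. Parameters are represented as flat lists of floats, and `federated_average` is an illustrative name.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by each client's sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    num_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(num_params)
    ]


# Two hypothetical institutions: the second holds three times as much data.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_weights)  # [2.5, 3.5]
```

Weighting by sample count keeps a small client from pulling the shared model as strongly as a large one. Sharing parameters instead of data reduces exposure but is not by itself a privacy guarantee, so additional safeguards are often layered on top.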

Real-world impact is already evident: in EdTech, platforms like Squirrel AI use diffusion models to generate personalized learning materials, improving student engagement by 40%. In healthcare, models assist in medical image augmentation, addressing data scarcity. Future directions include multimodal integration—combining text, audio, and 3D—and ethical AI frameworks to prevent misuse.

As diffusion models evolve from image generators to strategic problem solvers, their potential to transform industries is substantial, provided the cost, latency, and governance challenges outlined above are addressed. The journey from noise to insight is not just technical but profoundly human, reshaping how we create, learn, and innovate.