The Quest for Human-Level Intelligence: AGI Defined

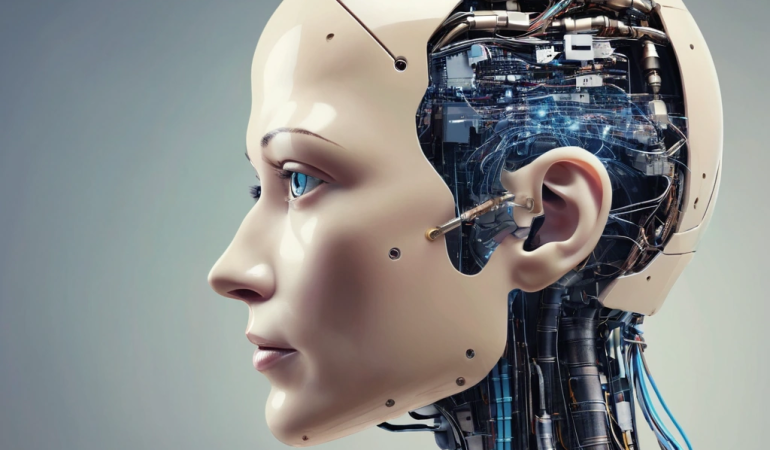

The pursuit of Artificial General Intelligence (AGI), or human-level AI, represents one of the grandest challenges of the 21st century, captivating researchers and sparking ethical debates across the globe. Unlike narrow AI, which excels at specific tasks like image recognition (as seen in medical diagnostics) or playing chess (demonstrated by AlphaZero), AGI aims to create systems that can understand, learn, and apply knowledge across a wide range of domains, much like a human being. This ambition requires not only advanced algorithms but also a deep understanding of human cognition, encompassing areas like common sense reasoning, abstract thought, and creative problem-solving.

This article provides a practical roadmap to AGI, surveying the current state of research, the significant technical hurdles that remain, and the ethical considerations that must guide its development. We analyze existing approaches, discuss potential breakthroughs, and offer a realistic timeline for achieving this transformative technology. The implications of AGI are profound: it promises to revolutionize industries, help solve complex global problems, and reshape our understanding of intelligence itself. However, it also presents significant risks that demand careful consideration and proactive mitigation.

AGI’s potential impact extends far beyond automating existing processes. Imagine AI systems capable of accelerating scientific discovery by analyzing vast datasets and formulating novel hypotheses, or personalized education platforms that adapt to each student’s unique learning style and pace. AGI could lead to breakthroughs in areas such as climate change modeling, drug discovery, and sustainable energy, offering humanity unprecedented tools to address some of its most pressing challenges. However, realizing these benefits requires addressing fundamental questions about AI safety: ensuring that AGI systems are aligned with human values and goals, and preventing unintended consequences. This necessitates a multidisciplinary approach, involving not only computer scientists and engineers but also ethicists, policymakers, and social scientists.

One of the key distinctions between AGI and current machine learning models lies in the ability to transfer learning across domains. A narrow AI system trained to recognize cats in images cannot readily apply that knowledge to understanding human language, whereas an AGI system should be able to leverage what it already knows to learn new skills and adapt to novel situations. This capability, known as transfer learning, is crucial for human-level intelligence: it is what lets humans build on their existing knowledge and learn new things efficiently. Furthermore, AGI systems must possess common sense reasoning abilities, enabling them to understand the implicit assumptions and contextual nuances that are essential for navigating the real world.

Addressing these challenges requires innovative approaches to AI architecture, such as hybrid architectures that combine the strengths of neural networks and symbolic AI, as well as novel computing paradigms like neuromorphic computing, which mimics the structure and function of the human brain.

As we embark on the journey towards AGI, it is crucial to acknowledge the ethical and societal implications of this technology. Concerns about job displacement, algorithmic bias, and the potential misuse of AGI must be addressed proactively through careful planning and regulation. Ensuring fairness, transparency, and accountability in AGI systems is essential for building public trust and preventing unintended harm. Moreover, it is important to consider the potential impact of AGI on human autonomy and decision-making, and to develop strategies for preserving human values in an increasingly AI-driven world. The development of AGI is not simply a technical challenge; it is a societal endeavor that requires careful consideration of its ethical, legal, and social implications. The future of AI, and indeed the future of humanity, may depend on our ability to navigate these complex issues responsibly.

AGI vs. Narrow AI vs. ASI: Understanding the Spectrum of Intelligence

AGI stands apart from its AI cousins, narrow AI and Artificial Superintelligence (ASI). Narrow AI, the dominant form of AI today, is designed for specific tasks: think of spam filters, recommendation algorithms, or self-driving car systems. While incredibly useful, these systems lack the general cognitive abilities of humans. ASI, on the other hand, is a hypothetical future AI that surpasses human intelligence in all aspects, including creativity, problem-solving, and general wisdom. AGI represents the crucial middle ground: AI capable of performing any intellectual task that a human being can. Reaching it requires more than just scaling up existing narrow AI techniques; it demands fundamental breakthroughs in how we represent knowledge, reason, and learn.

The distinction between these forms of AI is crucial when considering the future of AI and its ethical implications. Narrow AI, while posing certain risks related to bias and job displacement, is largely governed by specific design parameters and limited scope. AGI, however, introduces a new realm of complexity. Achieving human-level AI necessitates imbuing machines with common sense reasoning, the ability to transfer learning across domains, and robust safety mechanisms to prevent unintended consequences. Its development also compels us to confront fundamental questions about consciousness, moral status, and risks that far exceed those posed by narrow AI.

Furthermore, the trajectory from narrow AI to AGI is not necessarily linear. While advancements in neural networks have fueled progress in areas like image recognition and natural language processing, these techniques alone are unlikely to deliver AGI. Many researchers believe that hybrid architectures, combining the strengths of neural networks with symbolic AI approaches, may be necessary. Others are exploring entirely new paradigms, such as neuromorphic computing, which seeks to mimic the structure and function of the human brain at a hardware level. The quest for AGI is thus a multi-faceted endeavor, demanding innovation across a wide range of disciplines within computer science and cognitive science.

Understanding the differences between narrow AI, AGI, and ASI is also paramount when evaluating claims about timelines. Overly optimistic predictions often fail to account for the significant technical hurdles that remain in achieving human-level AI. While narrow AI continues to advance at a rapid pace, the leap to AGI requires solving problems that are fundamentally different in nature. This includes not only improving the performance of existing AI systems but also developing entirely new approaches to knowledge representation, reasoning, and learning. Furthermore, the development of AGI must be guided by careful consideration of AI ethics, ensuring that these powerful systems are aligned with human values and serve the common good. The potential benefits are immense, but so are the risks, making responsible development essential.

Key Technical Hurdles: Common Sense, Transfer Learning, and AI Safety

Achieving AGI requires overcoming several key technical hurdles that differentiate it significantly from narrow AI applications. One of the most significant is common sense reasoning. Humans possess an intuitive understanding of the world (gravity, object permanence, social cues) that allows them to make inferences and solve problems based on everyday knowledge. AI systems, however, often struggle with even simple common sense tasks. For example, knowing that water flows downhill or that objects fall when dropped is effortless for a person, yet capturing the full breadth of such knowledge, and recognizing when each piece applies, has proved difficult to encode in algorithms. Current machine learning models excel at pattern recognition but often lack the deeper understanding of causality and context necessary for true common sense. This deficiency is a major roadblock on the path to AGI.

Transfer learning, the ability to apply knowledge gained in one domain to another, is another critical challenge. Humans can easily transfer skills learned in one area to new situations, adapting strategies and insights from previous experiences. AI systems, particularly those based on neural networks, often need substantial retraining or fine-tuning on task-specific data for each new task, a process that can be both computationally expensive and time-consuming.
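To make the idea of transfer learning concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not a description of any production system: both tasks are constructed to depend on the same hidden feature (`latent`, a hypothetical function invented for this example), the "encoder" is just a linear model, and all names are illustrative. An encoder is fitted on a data-rich source task, frozen, and reused on a target task that has only two labelled examples; a baseline trained from scratch on those two examples is shown for comparison.

```python
def predict(w, b, x):
    """Linear model output: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fit_linear(xs, ys, lr=0.1, steps=5000):
    """Fit y ~ w . x + b by full-batch gradient descent on half the mean squared error."""
    dim, n = len(xs[0]), len(xs)
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(xs, ys):
            err = predict(w, b, x) - y
            for i in range(dim):
                grad_w[i] += err * x[i] / n
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def latent(x):
    """Hidden feature shared by both toy tasks (an assumption of this example)."""
    return x[0] + 2 * x[1]


# Source task: plenty of labelled data, used to "pretrain" the encoder.
source_x = [(i, j) for i in range(-2, 3) for j in range(-2, 3)]
source_y = [latent(x) for x in source_x]
enc_w, enc_b = fit_linear(source_x, source_y)

# Target task: a different function of the same feature, only two examples.
target_x = [(1, 0), (0, 1)]
target_y = [-3 * latent(x) + 1 for x in target_x]

# Transfer: keep the encoder frozen and fit only a small head on its output.
features = [[predict(enc_w, enc_b, x)] for x in target_x]
head_w, head_b = fit_linear(features, target_y)


def transfer_predict(x):
    """Frozen encoder followed by the newly fitted head."""
    return predict(head_w, head_b, [predict(enc_w, enc_b, x)])


# Baseline: fit the full model on the two target examples alone.
scratch_w, scratch_b = fit_linear(target_x, target_y)

test_x = (2, 2)
truth = -3 * latent(test_x) + 1
transfer_error = abs(transfer_predict(test_x) - truth)
scratch_error = abs(predict(scratch_w, scratch_b, test_x) - truth)
print(f"transfer error: {transfer_error:.6f}, from-scratch error: {scratch_error:.6f}")
```

Because the target task was built to share structure with the source task, the frozen encoder leaves only two parameters to fit, and two examples are enough. The from-scratch baseline has three free parameters and the same two examples, so it fits them but generalizes poorly to the held-out point. Real tasks rarely share structure this cleanly, which is part of why transfer remains hard.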

Progress in meta-learning and few-shot learning offers some promise, but achieving the flexibility and adaptability of human cognition remains a significant hurdle. Overcoming this limitation is crucial for creating AGI systems capable of operating in diverse and unpredictable environments.

Robust AI safety mechanisms are also essential. As AI systems become more powerful, potentially even reaching ASI levels, it’s crucial to ensure that they are aligned with human values and goals and that they cannot be easily manipulated or misused. This requires developing techniques for verifying and validating AI systems, as well as mechanisms for preventing unintended consequences. The field of AI ethics is grappling with these challenges, exploring approaches such as value alignment, explainable AI (XAI), and robust control mechanisms. Ensuring AI safety is not merely a technical problem; it’s a profound ethical imperative that must be addressed proactively to mitigate the risks and maximize the benefits. Moreover, if timelines to advanced AI shorten, resolving these questions becomes more urgent.

Existing Approaches and Research Directions: Neural Networks, Symbolic AI, and Hybrids

Current AI research explores various approaches to AGI, each with its strengths and weaknesses. Neural networks, inspired by the structure of the human brain, have achieved remarkable success in areas such as image recognition and natural language processing. However, they often lack the ability to reason abstractly or to generalize to new situations. Symbolic AI, which represents knowledge using symbols and logical rules, offers a more structured approach to reasoning but can struggle with the complexities and uncertainties of the real world.

Hybrid architectures, which combine the strengths of neural networks and symbolic AI, represent a promising direction for AGI research. These systems aim to integrate the learning capabilities of neural networks with the reasoning abilities of symbolic AI, creating more robust and flexible AI systems. Another emerging research direction is neuromorphic computing, which seeks to build computer hardware that mimics the structure and function of the human brain. This could potentially lead to more energy-efficient and powerful AI systems.
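The hybrid idea described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the "neural" half is a stand-in (fixed logistic scorers with made-up weights in `PERCEPTION_WEIGHTS`, not a trained network), the rule base in `RULES` is invented for the example, and real neuro-symbolic systems are far more elaborate. The point is the division of labour: a learned component turns raw features into symbols with confidences, and a symbolic component reasons over those symbols with explicit rules.

```python
import math

# Stand-in for a trained perception network: one logistic scorer per symbol.
# The weights and biases are hypothetical, chosen only for illustration.
PERCEPTION_WEIGHTS = {
    "has_feathers": ([4.0, 0.0], -2.0),
    "has_wings": ([0.0, 4.0], -2.0),
}

# Symbolic knowledge: (set of premises, conclusion). Also hypothetical.
RULES = [
    (frozenset({"has_feathers", "has_wings"}), "bird"),
    (frozenset({"bird"}), "lays_eggs"),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def perceive(features):
    """'Neural' step: map raw features to a confidence for each symbol."""
    return {
        symbol: sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)
        for symbol, (weights, bias) in PERCEPTION_WEIGHTS.items()
    }


def forward_chain(facts, rules):
    """Symbolic step: apply rules repeatedly until no new fact can be derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


def infer(features, threshold=0.5):
    """Keep symbols the perception step is confident about, then reason over them."""
    confident = {s for s, p in perceive(features).items() if p >= threshold}
    return forward_chain(confident, RULES)


print(sorted(infer([1.0, 1.0])))
print(sorted(infer([1.0, 0.0])))
```

With both input features active, the perception step asserts both symbols and the rules derive `bird` and then `lays_eggs`; with only the first feature active, no rule fires. Thresholding confidences into hard facts is the simplest possible interface between the two halves and discards uncertainty that a more careful design would propagate.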

Beyond these established approaches, researchers are increasingly exploring Bayesian networks and probabilistic programming as tools for tackling the uncertainty inherent in real-world environments. These methods allow AI systems to reason under conditions of incomplete information, a critical capability for achieving human-level AI. Furthermore, the field is witnessing a resurgence of interest in evolutionary algorithms, not just for optimizing neural network architectures, but also for evolving entire AI systems from scratch. This approach, inspired by natural selection, holds the potential to discover novel and unexpected solutions to the AGI challenge, potentially bypassing the limitations of human-designed architectures.
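As a small illustration of reasoning under incomplete information, the sketch below does exact inference by enumeration in a three-variable Bayesian network. The network (rain and sprinkler as independent causes of wet grass) is a textbook-style toy, and every probability in it is an assumed number chosen for the example, not data from any source.

```python
from itertools import product

# Assumed prior and conditional probabilities for the toy network.
P_RAIN = 0.2
P_SPRINKLER = 0.3
P_WET = {  # P(wet = True | rain, sprinkler)
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.00,
}


def joint(rain, sprinkler, wet):
    """Joint probability of one complete assignment, using the network's factorization."""
    p = P_RAIN if rain else 1 - P_RAIN
    p *= P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
    p_wet = P_WET[(rain, sprinkler)]
    p *= p_wet if wet else 1 - p_wet
    return p


def posterior_rain(**evidence):
    """P(rain = True | evidence), summing the joint over all unobserved variables."""
    numerator = denominator = 0.0
    for rain, sprinkler, wet in product([True, False], repeat=3):
        world = {"rain": rain, "sprinkler": sprinkler, "wet": wet}
        if any(world[name] != value for name, value in evidence.items()):
            continue  # inconsistent with what was observed
        p = joint(rain, sprinkler, wet)
        denominator += p
        if rain:
            numerator += p
    return numerator / denominator


print(posterior_rain())                          # prior belief in rain
print(posterior_rain(wet=True))                  # grass observed wet
print(posterior_rain(wet=True, sprinkler=True))  # wet, and the sprinkler was on
```

With these assumed numbers, observing wet grass raises the belief in rain from 0.2 to roughly 0.49, and additionally learning that the sprinkler was on lowers it to roughly 0.24, because the sprinkler already explains the wet grass. Enumeration scales exponentially with the number of variables, so practical systems rely on approximate inference or on structure in the network.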

These diverse approaches highlight the complexity of the quest for AGI, which also demands advances beyond core algorithms. For instance, robust and scalable knowledge representation schemes are crucial: AI systems need to store, organize, and retrieve vast amounts of information in a way that facilitates efficient reasoning and learning. This includes not only factual knowledge but also common sense knowledge, which remains a significant hurdle. Progress in transfer learning is likewise essential, so that systems can leverage knowledge gained in one domain to solve problems in another. Overcoming these challenges is paramount to creating AGI systems that can adapt and generalize like humans.

Finally, the ethical implications of different AGI research directions are gaining increasing attention within the AI ethics community. For example, the design choices made in developing AGI systems can have a significant impact on their fairness, transparency, and accountability. It’s critical to proactively address risks such as bias amplification and unintended consequences, to ensure that AGI benefits all of humanity. Moreover, the potential for ASI raises profound questions about AI safety and the long-term impact of advanced AI on society. The timeline for achieving AGI remains uncertain, but the need to weigh its benefits and risks carefully is undeniable, regardless of when human-level AI is ultimately achieved.

Ethical and Societal Implications: Risks and Benefits of AGI

The development of AGI raises profound ethical and societal implications. On the one hand, AGI has the potential to help solve some of the world’s most pressing problems, such as climate change, disease, and poverty. It could also lead to significant advancements in fields such as medicine, education, and energy. Consider, for example, AGI-driven drug discovery, where human-level AI could analyze vast datasets of genomic information to identify novel therapeutic targets and accelerate the development of life-saving treatments. Similarly, AGI could revolutionize education by creating personalized learning experiences tailored to individual student needs, fostering deeper understanding and improved learning outcomes. These potential benefits underscore the transformative power of AGI for societal good.

On the other hand, AGI also presents significant risks. The potential for job displacement due to automation is a major concern. As AI systems become more capable, they could replace human workers in a wide range of industries, leading to widespread unemployment and economic inequality. The misuse of AGI for malicious purposes, such as autonomous weapons or sophisticated surveillance systems that infringe on privacy rights, is another significant risk. Furthermore, the concentration of AGI development in the hands of a few powerful entities could exacerbate existing inequalities and create new forms of social stratification.

Addressing these risks requires proactive measures on several fronts. The development of robust AI ethics frameworks is crucial, emphasizing principles such as fairness, transparency, and accountability. International collaboration is also essential to ensure that AGI is developed and deployed responsibly across borders. Furthermore, investment in education and retraining programs is necessary to mitigate the potential for job displacement and to equip individuals with the skills needed to thrive in an AI-driven economy. As we strive towards human-level AI, prioritizing AI safety and ethical considerations is paramount to ensuring a future where AGI benefits all of humanity. This includes actively researching and implementing techniques to align the goals of AGI systems with human values, mitigating the risk of unintended consequences. The responsible development of AGI is not merely a technical challenge, but a moral imperative.

A Realistic Timeline: When Will We Achieve AGI?

Predicting when AGI will arrive is inherently uncertain, yet informed speculation is crucial for strategic planning and resource allocation. Current estimates vary widely; a realistic assessment considers both the limitations of today’s narrow AI systems and the projected advancements in key areas. Despite recent progress in deep learning and neural networks, fundamental breakthroughs are still needed in common sense reasoning, transfer learning, and robust AI safety protocols. Experts like Ben Goertzel, CEO of SingularityNET, suggest that ‘rudimentary forms of AGI’ could emerge within the next decade, contingent on solving critical knowledge representation and reasoning challenges. However, achieving truly human-level AI, capable of general intelligence and adaptability across diverse domains, may take decades, or even centuries, of sustained research and development.

Accelerating progress towards AGI requires a multi-faceted approach, emphasizing interdisciplinary collaboration and strategic investment. Moving beyond the limitations of narrow AI demands a convergence of insights from neuroscience, cognitive science, computer science, and mathematics. Progress also hinges on novel architectural approaches, potentially moving beyond purely connectionist models like neural networks towards hybrid architectures that integrate symbolic AI and connectionist learning. Neuromorphic computing, inspired by the brain’s structure, offers another promising avenue for energy-efficient and adaptable AGI systems. Investment in fundamental research, particularly in areas like unsupervised learning and reinforcement learning, is paramount to unlocking the potential of AGI.

Beyond the technical challenges, the timeline for AGI is inextricably linked to addressing the ethical and societal implications of increasingly intelligent systems. As AI systems approach or surpass human capabilities, questions of ethics, risk, and benefit become increasingly urgent. Ensuring that AGI is aligned with human values and that its development is guided by principles of fairness, transparency, and accountability is crucial to mitigating potential risks and maximizing the positive impact of AGI on society. Proactive measures, such as establishing robust regulatory frameworks and fostering public discourse on the ethical implications of AGI, are essential to ensuring a safe and beneficial transition to an AGI-powered world. The ongoing dialogue surrounding AI safety and the potential for ASI underscores the importance of careful planning in the pursuit of AGI.