The Algorithmic Takeover: Generative AI’s Impact on Stock Trading

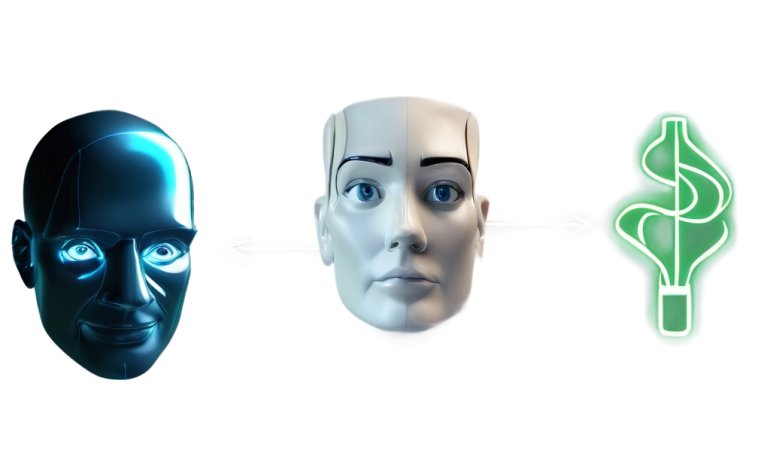

The rise of generative artificial intelligence (AI) is rapidly transforming the financial landscape, particularly stock trading. Once the domain of human analysts and gut instincts, the market is now increasingly shaped by algorithms that process vast datasets and execute trades at previously unimaginable speeds. While these advances promise greater efficiency and potentially higher returns, they also introduce a complex web of ethical dilemmas that demand careful consideration. This article examines how financial ethics are evolving in the age of generative AI, focusing on stock trading, and aims to give financial professionals, regulators, and investors a comprehensive understanding of the challenges and potential solutions.

The integration of generative AI into stock trading necessitates a re-evaluation of AI ethics in finance that goes beyond traditional regulatory frameworks to address novel risks. Algorithmic trading, now responsible for an estimated 60-80% of U.S. equity trading volume, exemplifies this shift. These algorithms, increasingly powered by sophisticated machine learning models, can identify and exploit fleeting market inefficiencies with superhuman speed. However, the growing reliance on these ‘black box’ systems raises critical questions about algorithmic trading ethics.

For instance, the flash crash of 2010, in which the Dow Jones Industrial Average plunged nearly 1,000 points within minutes, was a stark reminder of the unintended consequences that can follow when complex algorithms interact in unpredictable ways. Greater transparency in AI trading and robust risk management protocols are therefore essential.

Generative AI’s capacity to create synthetic data and simulate market scenarios further complicates the ethical landscape. These simulations can be valuable tools for stress-testing trading strategies and identifying potential vulnerabilities, but the same generative capabilities also open the door to sophisticated forms of market manipulation. Imagine, for example, an AI generating fake news articles designed to trigger specific market reactions, allowing the algorithm’s creators to profit from the ensuing volatility. Addressing these challenges requires a multi-faceted approach: enhanced regulatory oversight, explainable AI (XAI) techniques that shed light on algorithmic decision-making, and a commitment to accountability for AI errors in finance.

Furthermore, the concentration of advanced AI capabilities within a small number of large financial institutions creates an uneven playing field. Smaller firms and individual investors may lack the resources to compete with these AI-powered behemoths, potentially exacerbating existing inequalities in the market. This raises fundamental questions about fairness and access in generative AI stock trading. Regulators must consider measures to promote a more level playing field, such as requiring firms to share anonymized data or providing smaller market participants with access to AI training resources. Ultimately, the responsible deployment of AI in finance requires a collaborative effort among regulators, financial institutions, and technology developers to ensure that these powerful tools are used in a way that benefits society as a whole.

AI in Action: Current Applications in Stock Trading

Generative AI tools are being deployed across many facets of stock trading. Algorithmic trading, increasingly powered by AI, accounts for a significant portion of market volume; these algorithms analyze historical data, real-time market trends, and even news sentiment to identify profitable trading opportunities. Market analysis is also changing, with AI able to generate reports and predictions that would take human analysts weeks to produce. AI is also used for fraud detection, identifying suspicious trading patterns and potentially preventing market manipulation. Several firms, for example, use AI to analyze traders’ communication patterns for signs of collusion or insider trading, flagging anomalies for human review.

However, this widespread adoption also brings significant risks. The 2010 ‘Flash Crash’ demonstrated that algorithmic trading can destabilize markets, highlighting the need for robust safeguards. The growing reliance on generative AI in stock trading therefore calls for a deeper examination of AI ethics in finance. Algorithmic trading ethics demands that we consider the potential for bias in training data, which can lead to discriminatory outcomes or unintended market manipulation.

For instance, if an AI is trained primarily on data from a bull market, it may perform poorly, and even amplify losses, during a downturn. Addressing these ethical considerations requires careful data curation, robust testing across different market conditions, and ongoing monitoring of AI performance to ensure fairness and prevent unintended consequences. Developers of generative AI trading tools must therefore build ethical design principles in from the outset. Transparency in AI trading is also paramount to maintaining market integrity and investor confidence.
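
One way to make the bull-market example above testable is to score a strategy separately in rising and falling markets instead of reporting a single average. The Python sketch below does this with plain lists of daily returns. The function name `regime_report`, the trailing-window rule for labelling regimes, and the sample data are illustrative assumptions, not a standard methodology.

```python
from statistics import mean


def regime_report(market_returns, strategy_returns, window=3):
    """Summarize a strategy's daily returns separately for rising and falling markets.

    Hypothetical rule: a day counts as "bull" when the market's summed return over
    the previous `window` days is positive, and "bear" otherwise.
    """
    if len(market_returns) != len(strategy_returns):
        raise ValueError("return series must have the same length")
    buckets = {"bull": [], "bear": []}
    # Start at `window` so every evaluated day has a full look-back period.
    for t in range(window, len(market_returns)):
        trailing = sum(market_returns[t - window:t])
        regime = "bull" if trailing > 0 else "bear"
        buckets[regime].append(strategy_returns[t])
    return {
        name: {"days": len(values), "mean": mean(values) if values else None}
        for name, values in buckets.items()
    }


# Hypothetical data: five up days followed by five down days, and a strategy
# that simply mirrors the market, as a model that learned "always be long"
# from bull-market training data might.
market = [0.01] * 5 + [-0.03] * 5
strategy = list(market)
report = regime_report(market, strategy)
print(report)
```

Reporting the two regimes side by side makes a collapse in falling markets visible, where a single full-period average could hide it behind strong bull-market results.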

The opacity of many AI algorithms makes it difficult to understand how trading decisions are made, raising concerns about accountability for AI errors in finance. When an AI-driven trading system causes significant financial losses, it can be challenging to determine the root cause and assign responsibility. This lack of transparency undermines trust in the market and creates opportunities for unethical behavior. Regulatory bodies are beginning to explore ways to increase transparency in algorithmic trading, such as requiring firms to disclose the basic logic and parameters of their AI systems.

Greater transparency would allow for better oversight and help prevent future market disruptions. Moreover, the speed and scale at which AI algorithms operate raise concerns about their potential to amplify market volatility. While AI can quickly identify and exploit trading opportunities, it can also trigger rapid-fire trading cascades that destabilize prices. The 2010 ‘Flash Crash’ illustrated this risk, highlighting the need for robust risk management controls and circuit breakers to keep algorithmic trading from spiraling out of control. As AI becomes increasingly sophisticated, regulators must adapt their oversight mechanisms to keep pace with the technology and ensure that the benefits of AI in finance are not outweighed by the risks.
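
The circuit-breaker idea above reduces to a simple rule: compare each new price with a reference level and halt when the decline crosses a threshold. This minimal Python sketch uses tiered thresholds of 7%, 13%, and 20% below the reference, loosely modeled on U.S. market-wide circuit breakers. The class name, the one-price-at-a-time interface, and the omission of halt durations and time-of-day rules are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class CircuitBreaker:
    """Hypothetical tiered circuit breaker based on the decline from a reference price."""

    reference_price: float                              # e.g. the prior session's close
    thresholds: tuple[float, ...] = (0.07, 0.13, 0.20)  # declines that trip levels 1, 2, 3
    tripped_level: int = 0                              # highest level tripped so far

    def check(self, price: float) -> int:
        """Return the level newly tripped by `price`, or 0 if no new level is breached."""
        decline = (self.reference_price - price) / self.reference_price
        level = sum(1 for t in self.thresholds if decline >= t)
        if level > self.tripped_level:
            self.tripped_level = level
            return level
        return 0


breaker = CircuitBreaker(reference_price=100.0)
for price in [100.0, 97.5, 92.0, 91.0, 85.0, 79.0]:
    level = breaker.check(price)
    if level:
        print(f"price {price}: level {level} tripped, halt automated order flow")
```

Because the breaker reacts only to the size of the move, it halts a runaway cascade regardless of which algorithm caused it; real rules also specify how long a halt lasts and how trading resumes.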

The Black Box Problem: Transparency and Accountability

One of the most pressing ethical challenges in generative AI stock trading is the inherent lack of transparency in AI-driven trading decisions. Many algorithms operate as ‘black boxes,’ inscrutable even to their creators, which makes it difficult to understand, let alone audit, how they arrive at specific trading decisions. This opacity raises profound concerns about fairness and accountability, striking at the heart of AI ethics in finance. When an AI algorithm makes an erroneous or biased trade, assigning responsibility becomes a complex legal and ethical quagmire. Is it the programmer who designed the algorithm, potentially embedding biases in the code? The firm that deployed it, perhaps without adequate testing or oversight? Or, in a more dystopian scenario, is the AI itself to blame, having learned and adapted in unpredictable ways?

The complexity is compounded by the fact that machine learning systems, including generative AI, can be retrained on new data and change their strategies over time, unlike traditional rule-based systems whose logic stays fixed until someone edits it. This dynamic nature makes it exceedingly difficult to predict their future behavior or to guarantee that they will adhere to predefined ethical guidelines.

The Knight Capital Group incident of 2012, in which faulty trading software caused a loss of roughly $440 million in about 45 minutes, is an enduring reminder of the financial consequences of algorithmic errors, even in conventional, rule-based systems. Generative AI could magnify these risks considerably as algorithms become more autonomous and less predictable, which calls for a re-evaluation of existing regulatory frameworks through the lens of algorithmic trading ethics.

The ‘black box’ nature of these algorithms also directly impedes regulatory oversight. Regulators struggle to assess whether AI trading systems comply with existing laws against market manipulation or insider trading when the decision-making processes are opaque. The SEC, for example, faces a daunting task in ensuring market integrity when algorithms can execute thousands of trades per second based on factors that are difficult to discern or quantify. This lack of transparency also undermines investor confidence, as individuals may hesitate to participate in markets where they perceive an unfair advantage held by those with access to sophisticated yet inscrutable AI-driven trading tools. Addressing transparency in AI trading is therefore paramount for maintaining fair and efficient markets and for fostering trust in the financial system.

Exacerbating Inequality: AI and Market Manipulation

The potential for AI to exacerbate existing inequalities in the financial markets is a significant ethical fault line. Sophisticated AI tools, demanding substantial computational power and specialized expertise, are often accessible only to large institutions and hedge funds, creating an uneven playing field. This disparity grants these entities an unfair advantage over smaller investors and retail traders, potentially leading to a concentration of wealth and market power in the hands of a few, further widening the gap between the financially secure and those struggling to participate.

This raises critical questions about AI ethics in finance and the equitable distribution of technological benefits. Generative AI stock trading also introduces novel avenues for market manipulation, which demands proactive regulatory oversight. Algorithms could be designed to subtly exploit vulnerabilities in market regulations, engage in predatory trading practices, or even generate and disseminate misinformation to artificially influence stock prices. The speed and scale at which AI operates make these manipulations particularly difficult to detect and counteract.

The GameStop short squeeze of 2021, though driven largely by human actors, is a cautionary tale about how coordinated market activity can destabilize prices. Generative AI could amplify such efforts considerably, which calls for robust transparency mechanisms in AI trading and real-time monitoring of algorithmic behavior.

Accountability for AI errors in finance is equally important. When an AI algorithm makes a trading error that causes significant financial losses for others, determining responsibility becomes complex. Is it the programmer who wrote the code, the firm that deployed the algorithm, or the AI itself? Current regulatory frameworks often struggle with these novel scenarios, leaving gaps in which unethical behavior can flourish. Establishing clear lines of accountability, coupled with rigorous testing and validation protocols informed by algorithmic trading ethics, is crucial to maintaining market integrity and investor confidence. This includes developing standards for explainable AI (XAI) that can provide insight into the decision-making processes of these complex systems.

Toward Ethical AI: Solutions and Regulatory Frameworks

To foster transparency, accountability, and fairness in the deployment of generative AI in stock trading, a multi-pronged approach encompassing practical solutions and robust regulatory frameworks is essential. Regulators must mandate that firms disclose the fundamental principles and objectives underpinning their AI algorithms. This transparency in AI trading allows for rigorous scrutiny, enabling the identification of potential biases, vulnerabilities, or unintended consequences that could disadvantage certain market participants or destabilize the system. For instance, an algorithm designed to front-run large orders, even subtly, could be detected and addressed before causing widespread harm.
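
As a rough illustration of how the front-running example above might be screened for, the Python sketch below flags proprietary orders placed shortly before a large client order in the same symbol and direction. The `Order` fields, the 10,000-share "large order" cutoff, and the five-second look-back are hypothetical parameters; a flag is only a lead for human review, not evidence of misconduct.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    timestamp: float  # seconds since the session opened
    symbol: str
    side: str         # "buy" or "sell"
    quantity: int
    account: str      # "client" or "proprietary" in this toy model


def flag_possible_front_running(orders, large_qty=10_000, lookback_s=5.0):
    """Pair each large client order with proprietary orders that preceded it
    in the same symbol and direction within `lookback_s` seconds."""
    flags = []
    large_client = [o for o in orders if o.account == "client" and o.quantity >= large_qty]
    for block in large_client:
        for o in orders:
            gap = block.timestamp - o.timestamp
            if (o.account == "proprietary"
                    and o.symbol == block.symbol
                    and o.side == block.side
                    and 0 < gap <= lookback_s):
                flags.append((o, block))
    return flags


orders = [
    Order(10.0, "XYZ", "buy", 500, "proprietary"),   # 2 s before the block: flagged
    Order(12.0, "XYZ", "buy", 25_000, "client"),     # large client order
    Order(11.0, "XYZ", "sell", 300, "proprietary"),  # opposite side: ignored
    Order(3.0, "XYZ", "buy", 400, "proprietary"),    # 9 s earlier: outside window
    Order(11.5, "ABC", "buy", 200, "proprietary"),   # different symbol: ignored
]
for prop, block in flag_possible_front_running(orders):
    print(f"review: proprietary {prop.side} {prop.quantity} {prop.symbol} "
          f"at t={prop.timestamp}s before client order at t={block.timestamp}s")
```

The nested loop keeps the logic easy to audit; a surveillance system handling full market volume would index orders by symbol and time instead.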

This proactive oversight is crucial for maintaining market integrity and investor confidence. Establishing clear lines of accountability is equally important. Firms must be held responsible for the actions of their AI algorithms, even when the intricate workings of these ‘black boxes’ remain partially opaque. Such accountability for AI errors in finance requires robust risk management frameworks and internal controls. Consider a scenario in which an AI algorithm triggers a flash crash because of a flawed trading strategy. The firm deploying the algorithm should be held liable for the resulting losses, which gives it an incentive to invest in thorough testing, validation, and ongoing monitoring of its AI systems. Insurance mechanisms and clearly defined legal recourse for affected parties are also vital components of this accountability structure.

Furthermore, independent audits of AI algorithms should be conducted regularly to ensure their fair and ethical operation. These audits, performed by qualified experts with a deep understanding of both AI ethics and financial markets, should assess the algorithms’ compliance with regulatory guidelines, their potential for discriminatory outcomes, and their overall impact on market stability.

The European Union’s AI Act is a significant step toward regulating high-risk AI systems, and its high-risk category covers some financial uses, such as creditworthiness assessment. However, more specific regulations tailored to the unique challenges of algorithmic trading ethics and generative AI stock trading are needed. These regulations should address issues such as data privacy, algorithmic bias, and the potential for market manipulation, creating a level playing field for all investors and promoting responsible innovation in the financial sector.

Explainable AI and the Future of Responsible Trading

One promising approach is the development of ‘explainable AI’ (XAI) techniques. XAI aims to make AI algorithms more transparent and understandable, allowing users to see how they arrive at specific decisions. This could help to build trust in AI systems and make it easier to identify and correct errors. Another solution is the use of AI to monitor AI. Algorithms could be designed to detect anomalies in the behavior of other algorithms, providing an early warning system for potential problems.
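
A very simple version of ‘AI monitoring AI’ is a statistical watchdog that compares another algorithm’s activity with its own recent history. The Python sketch below flags any interval whose order count lies more than a set number of standard deviations from the rolling mean. The class name, window size, and four-sigma limit are illustrative assumptions, and a production monitor would likely track many more signals than order counts.

```python
from collections import deque
from statistics import mean, pstdev


class OrderRateMonitor:
    """Hypothetical watchdog: flags order counts far outside an algorithm's recent norm."""

    def __init__(self, window=30, z_limit=4.0, min_history=10):
        self.history = deque(maxlen=window)  # most recent per-interval order counts
        self.z_limit = z_limit
        self.min_history = min_history       # no verdicts until enough data is collected

    def observe(self, count):
        """Record one interval's order count and return True if it looks anomalous."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                anomalous = abs(count - mu) / sigma > self.z_limit
            else:
                anomalous = count != mu  # perfectly flat history: any change stands out
        self.history.append(count)
        return anomalous


monitor = OrderRateMonitor()
counts = [50, 52, 48, 51, 49, 50, 53, 47, 50, 51, 400, 50]
alerts = [i for i, c in enumerate(counts) if monitor.observe(c)]
print("anomalous intervals:", alerts)
```

Appending flagged values to the history lets the baseline adapt after a genuine change in behavior, at the cost of a temporarily wider band after an outlier; excluding them makes the opposite trade-off.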

Furthermore, education and training are crucial. Financial professionals need to be educated about the ethical implications of AI and trained to use these tools responsibly. Investors also need to be aware of the risks and limitations of AI-driven trading. The pursuit of transparency in AI trading necessitates a multi-faceted approach, combining technological innovation with robust regulatory oversight. Regulators are beginning to explore frameworks that demand greater clarity from financial institutions regarding their algorithmic trading practices.

For instance, the SEC has proposed rules addressing conflicts of interest in broker-dealers’ and investment advisers’ use of predictive data analytics, including AI models, signaling closer scrutiny of how these models are used. Such scrutiny can foster accountability for AI errors in finance and help deter market manipulation. Addressing AI ethics in finance also requires a shift in how algorithms are designed and evaluated. Algorithmic trading ethics should be embedded throughout the development lifecycle, from initial design to deployment and ongoing monitoring.

Independent audits and ethical reviews can help identify and address potential biases or unintended consequences. Incorporating diverse perspectives into the development process can also help ensure that algorithms are fair and equitable. This proactive approach is essential for building trust in AI-driven financial systems and preventing the exacerbation of existing inequalities. Real-world examples highlight the urgency of these considerations. The 2010 ‘flash crash,’ though driven by conventional automated trading rather than AI, demonstrated how rapidly algorithmic trading can destabilize markets. More recently, concerns have been raised about AI-powered systems that may exploit subtle market inefficiencies to the detriment of individual investors. As generative AI becomes more sophisticated, the need for robust ethical guidelines and regulatory frameworks becomes even more critical. The future of responsible trading hinges on our ability to harness the power of AI while mitigating its inherent risks.

Navigating the Future: A Call for Ethical AI in Finance

The integration of generative AI into stock trading presents both immense opportunities and significant ethical challenges. By proactively addressing transparency in AI trading, accountability for AI errors in finance, and fairness, we can harness AI to create a more efficient and equitable financial market. This requires a collaborative effort among regulators, financial institutions, and technology developers to establish clear ethical guidelines and robust regulatory frameworks. The future of finance hinges on our ability to navigate this complex landscape responsibly, ensuring that AI serves as a tool for progress, not a source of inequality and instability. The ongoing debate and the evolution of these systems will shape the financial world for decades to come.

Addressing algorithmic trading ethics requires a multi-pronged approach. Regulators such as the SEC and CFTC must develop specific guidelines for AI deployment in financial markets, focusing on preventing market manipulation and ensuring fair access to information. For example, mandating pre-trade risk assessments for AI algorithms and implementing circuit breakers that automatically halt trading when price swings or other unusual patterns exceed preset thresholds can mitigate potential systemic risks.
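
The pre-trade risk assessments suggested above are often implemented as hard checks that every order must pass before it reaches the market. The Python sketch below shows three common kinds: a per-order size cap, a price collar around a reference price, and a net position limit. The `RiskLimits` values and the function name are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskLimits:
    max_order_qty: int = 5_000   # largest single order allowed
    max_position: int = 20_000   # largest absolute net position allowed
    price_collar: float = 0.05   # max fractional distance from the reference price


def pre_trade_check(side, qty, limit_price, reference_price, position, limits):
    """Return the reasons an order should be blocked; an empty list means it may proceed."""
    if side not in ("buy", "sell"):
        raise ValueError(f"unknown side: {side!r}")
    reasons = []
    if qty <= 0:
        reasons.append("quantity must be positive")
    if qty > limits.max_order_qty:
        reasons.append("order exceeds per-order size limit")
    if abs(limit_price - reference_price) / reference_price > limits.price_collar:
        reasons.append("limit price outside price collar")
    signed_qty = qty if side == "buy" else -qty
    if abs(position + signed_qty) > limits.max_position:
        reasons.append("resulting position exceeds limit")
    return reasons


limits = RiskLimits()
print(pre_trade_check("buy", 1_000, 100.5, 100.0, 0, limits))
print(pre_trade_check("buy", 6_000, 110.0, 100.0, 19_000, limits))
```

Returning every failed check, rather than stopping at the first one, gives risk and compliance staff a complete picture of why an order was blocked.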

Furthermore, fostering collaboration between regulatory bodies and AI developers is crucial to create adaptable frameworks that keep pace with technological advancements. The goal is to establish a regulatory environment that promotes innovation while safeguarding market integrity and investor protection.

Explainable AI (XAI) offers a pathway to enhance transparency in AI trading. By making AI decision-making processes more understandable, XAI can build trust and facilitate accountability. Financial institutions should invest in developing and implementing XAI techniques to provide insights into how algorithms arrive at specific trading decisions. This includes visualizing decision pathways, identifying key factors influencing trades, and quantifying the uncertainty associated with predictions.

Moreover, independent audits of AI algorithms should be conducted regularly to verify their fairness and accuracy. These audits can help identify biases or vulnerabilities that could lead to discriminatory or erroneous outcomes, ensuring that AI systems align with ethical principles and regulatory requirements.

Ultimately, fostering AI ethics in finance requires a commitment to ongoing education and awareness. Financial professionals, regulators, and the public must be educated about the potential risks and benefits of generative AI in stock trading. This includes training programs on AI ethics, workshops on responsible AI development, and public awareness campaigns to promote informed decision-making. By cultivating a culture of ethical awareness, we can ensure that AI is used responsibly and effectively in the financial markets. The convergence of AI technology and financial regulation will continue to evolve, demanding constant vigilance and adaptation to maintain a fair and stable market environment.
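
To make "identifying key factors influencing trades" concrete, the simplest exact case is a linear scoring model, whose output can be split into one contribution per input feature relative to a baseline input. The Python sketch below does this. The feature names, weights, bias, and baseline values are hypothetical, and real trading models are rarely linear; nonlinear models need approximate attribution methods such as SHAP or permutation importance instead.

```python
import math


def explain_linear_signal(weights, bias, features, baseline):
    """Split a linear model's score into per-feature contributions.

    Each contribution is weight * (feature value - baseline value), so the
    contributions add up exactly to score(features) - score(baseline).
    """
    def score(x):
        return bias + sum(weights[name] * x[name] for name in weights)

    contributions = {
        name: weights[name] * (features[name] - baseline[name]) for name in weights
    }
    # Rank by absolute size so the most influential factor comes first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score(features), score(baseline), ranked


# Hypothetical signal model: a positive score means "lean toward buying".
weights = {"momentum_5d": 2.0, "news_sentiment": 1.5, "volatility": -3.0}
features = {"momentum_5d": 0.04, "news_sentiment": 0.6, "volatility": 0.02}
baseline = {"momentum_5d": 0.0, "news_sentiment": 0.0, "volatility": 0.015}

s, s0, ranked = explain_linear_signal(weights, 0.1, features, baseline)
for name, contribution in ranked:
    print(f"{name:>15}: {contribution:+.3f}")
# Sanity check: the explanation accounts for the whole change in score.
assert math.isclose(s - s0, sum(c for _, c in ranked))
```

A ranked list like this is the kind of output that could support the audits described above, since it shows which inputs pushed a given trade signal and by how much.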